Kubernetes is the de facto platform for automating the deployment, scaling, and management of containerized applications. One of its strongest features is its extensibility, which allows users to customize the system according to their needs. A key mechanism for this extensibility is the Custom Resource Definition (CRD).

What are CRDs?

CRDs allow Kubernetes users to create and manage their own custom resources. A Custom Resource (CR) is essentially a Kubernetes resource that does not exist by default but is defined via a CRD.

In other words, CRDs allow the creation of new resource types that Kubernetes doesn’t know about a priori. These resources behave like Kubernetes’ built-in resources (such as Pods, Services, Deployments), but their structure and functionality are determined by the CRD you create.

How do CRDs Work?

When we define a CRD, Kubernetes extends its API to handle the new resource type. Once a CRD is registered with the Kubernetes API Server, we can create instances of the custom resource just like we would with native resources. The API server will store these objects in Kubernetes’ distributed key-value store, etcd, and manage their lifecycle.
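To make the API extension concrete, here is a minimal sketch of the REST path under which the API server serves a namespaced custom resource. The helper name `customResourcePath` is hypothetical, and the group, version, and resource names are assumptions borrowed from the MyApp example built later in this post.

```go
package main

import (
	"fmt"
	"path"
)

// customResourcePath is a hypothetical helper that builds the URL path the
// API server uses for a namespaced custom resource:
// /apis/<group>/<version>/namespaces/<namespace>/<plural>/<name>
func customResourcePath(group, version, namespace, plural, name string) string {
	return path.Join("/apis", group, version, "namespaces", namespace, plural, name)
}

func main() {
	// Values assumed from the MyApp example used in this post.
	fmt.Println(customResourcePath("application.nbfc.io", "v1alpha1", "default", "myapps", "myapp-sample"))
}
```

Once the CRD is installed, a path of this shape is what clients such as kubectl request when they read or write a MyApp object.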

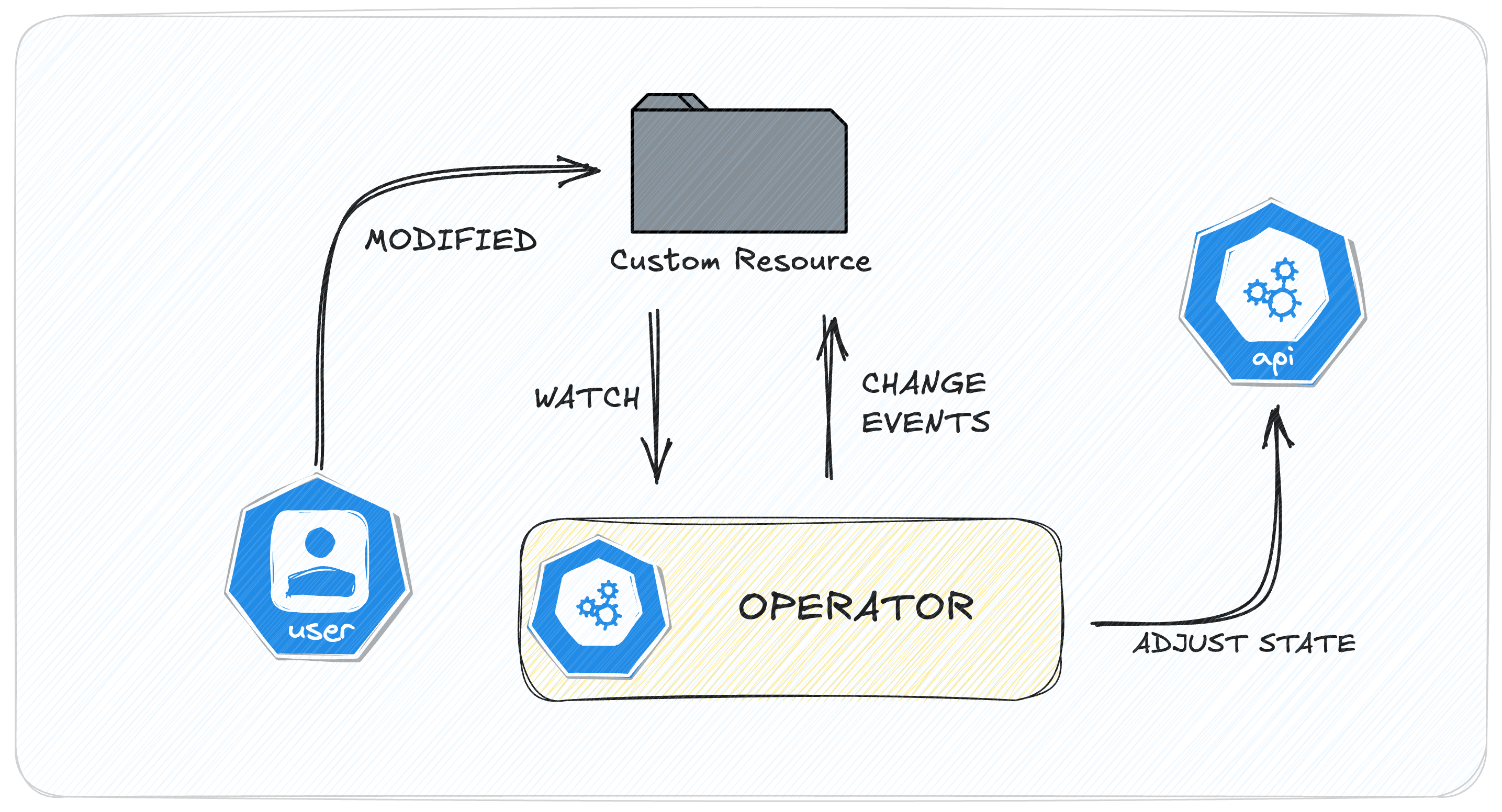

Figure 1: Generic K8s Operator

For example, after defining a MyApp CRD, we can create MyApp resources in our cluster, just like we would create Pods or Deployments. Several tools help with defining, setting up, and managing CRDs and their accompanying components. One such tool is KubeBuilder.

What is KubeBuilder?

Kubebuilder is a framework for building and managing Kubernetes operators and APIs. It is maintained as a Kubernetes SIG project (kubernetes-sigs/kubebuilder) and is widely used by developers who extend Kubernetes.

Basic features

- Building Operators: Kubebuilder provides a simple and efficient process for building, deploying, and testing Kubernetes operators.

- Building APIs: With Kubebuilder, users can build custom Kubernetes APIs and resources, allowing them to extend Kubernetes with their own functions and resources.

- Based on Go: Kubebuilder is written in Go and builds on the Kubernetes Go libraries (controller-runtime and client-go), so it integrates directly with the Go ecosystem.

Now let’s dive into the process of creating our first custom Kubernetes controller using KubeBuilder.

Creating Custom Kubernetes Controller with KubeBuilder

To get hands-on with KubeBuilder, we’ll create a custom resource called MyApp. This resource represents a simple application comprising multiple pods. We’ll also build a Kubernetes controller to manage the lifecycle of these MyApp instances, ensuring the desired state of our application is maintained within the cluster.

Prerequisites

- Go version v1.20.0+

- Docker version 17.03+

- Access to a Kubernetes v1.11.3+ cluster.

Installation

Let’s install KubeBuilder:

    curl -L -o kubebuilder "https://go.kubebuilder.io/dl/latest/$(go env GOOS)/$(go env GOARCH)"
    chmod +x kubebuilder && sudo mv kubebuilder /usr/local/bin/

Create a project

First, let’s create and navigate into a directory for our project. Then, we’ll initialize it using KubeBuilder:

    export GOPATH=$PWD
    echo $GOPATH
    mkdir $GOPATH/operator
    cd $GOPATH/operator
    go mod init nbfc.io
    kubebuilder init --domain=nbfc.io

Create a new API

    kubebuilder create api --group application --version v1alpha1 --kind MyApp \
      --image=ubuntu:latest --image-container-command="sleep,infinity" \
      --image-container-port="22" --run-as-user="1001" \
      --plugins="deploy-image/v1-alpha" --make=false

This command creates a new Kubernetes API with these parameters:

- --group application: Defines the API group name.

- --version v1alpha1: The API version.

- --kind MyApp: The name of the custom resource.

- --image=ubuntu:latest: The default container image to use.

- --image-container-command="sleep,infinity": The command the container runs, given as a comma-separated list.

- --image-container-port="22": Exposes port 22 on the container.

- --run-as-user="1001": Sets the user ID for running the container.

- --plugins="deploy-image/v1-alpha": Uses the deploy-image plugin.

- --make=false: Skips running make generate after scaffolding.

These parameters give the scaffolded custom resource its basic deployment settings (container image, command, port, and run-as-user), so we do not have to write that boilerplate by hand. The deploy-image plugin scaffolds an API and a controller that deploy and manage the given image, which we then adapt to our needs in the following sections.
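As a rough illustration of how these flags surface later in the post, the sketch below combines `--group`, `--domain`, `--version`, and `--kind` into the `apiVersion` string used in manifests and into the CRD name. The helper names are hypothetical, and the pluralization is a deliberate simplification (lowercase the kind and append "s") rather than what the real tooling does.

```go
package main

import (
	"fmt"
	"strings"
)

// apiVersion joins --group and --domain into the full API group and appends
// the --version, giving the value used in a manifest's apiVersion field.
func apiVersion(group, domain, version string) string {
	return fmt.Sprintf("%s.%s/%s", group, domain, version)
}

// crdName returns <plural>.<group>.<domain>. The plural is approximated by
// lowercasing the kind and appending "s", which is a simplification of the
// pluralization that the real tooling performs.
func crdName(kind, group, domain string) string {
	return fmt.Sprintf("%ss.%s.%s", strings.ToLower(kind), group, domain)
}

func main() {
	fmt.Println(apiVersion("application", "nbfc.io", "v1alpha1"))
	fmt.Println(crdName("MyApp", "application", "nbfc.io"))
}
```

The same group and plural appear in the generated CRD file name, application.nbfc.io_myapps.yaml, under config/crd/bases.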

Install the CRDs into the cluster

    make install

For quick feedback and code-level debugging, let’s run our controller:

    make run

Create an Application CRD with a custom controller

In this section we build an application description as a CRD, along with its accompanying controller that performs simple operations upon spawning. The application consists of a couple of pods and the controller creates services according to the exposed container ports. The rationale is to showcase how easy it is to define logic that accompanies the spawning of pods.

To create custom pods using the controller, we need to modify the following files:

- internal/controller/myapp_controller.go

- internal/controller/myapp_controller_test.go

- config/samples/application_v1alpha1_myapp.yaml

- api/v1alpha1/myapp_types.go

The scaffolded project has the following layout:

    .
    |-- Dockerfile
    |-- Makefile
    |-- PROJECT
    |-- README.md
    |-- api
    |   `-- v1alpha1
    |       |-- groupversion_info.go
    |       `-- myapp_types.go
    |-- bin
    |   |-- controller-gen -> /root/operator/bin/controller-gen-v0.16.1
    |   |-- controller-gen-v0.16.1
    |   |-- kustomize -> /root/operator/bin/kustomize-v5.4.3
    |   `-- kustomize-v5.4.3
    |-- cmd
    |   `-- main.go
    |-- config
    |   |-- crd
    |   |   |-- bases
    |   |   |   `-- application.nbfc.io_myapps.yaml
    |   |   |-- kustomization.yaml
    |   |   `-- kustomizeconfig.yaml
    |   |-- default
    |   |   |-- kustomization.yaml
    |   |   |-- manager_metrics_patch.yaml
    |   |   `-- metrics_service.yaml
    |   |-- manager
    |   |   |-- kustomization.yaml
    |   |   `-- manager.yaml
    |   |-- network-policy
    |   |   |-- allow-metrics-traffic.yaml
    |   |   `-- kustomization.yaml
    |   |-- prometheus
    |   |   |-- kustomization.yaml
    |   |   `-- monitor.yaml
    |   |-- rbac
    |   |   |-- kustomization.yaml
    |   |   |-- leader_election_role.yaml
    |   |   |-- leader_election_role_binding.yaml
    |   |   |-- metrics_auth_role.yaml
    |   |   |-- metrics_auth_role_binding.yaml
    |   |   |-- metrics_reader_role.yaml
    |   |   |-- myapp_editor_role.yaml
    |   |   |-- myapp_viewer_role.yaml
    |   |   |-- role.yaml
    |   |   |-- role_binding.yaml
    |   |   `-- service_account.yaml
    |   `-- samples
    |       |-- application_v1alpha1_myapp.yaml
    |       `-- kustomization.yaml
    |-- go.mod
    |-- go.sum
    |-- hack
    |   `-- boilerplate.go.txt
    |-- internal
    |   `-- controller
    |       |-- myapp_controller.go
    |       |-- myapp_controller_test.go
    |       `-- suite_test.go
    `-- test
        |-- e2e
        |   |-- e2e_suite_test.go
        |   `-- e2e_test.go
        `-- utils
            `-- utils.go

Modify myapp_controller.go


This file contains the core logic of our custom controller, where we implement the reconciliation logic to manage the lifecycle of MyApp resources. Specifically, the controller logic:

- Watches MyApp resources, and the Pods and Services they own, for changes

- Creates any Pod listed in the MyApp spec that does not exist yet

- Creates a Service for each Pod that exposes container ports

- Runs finalizer-based cleanup when a MyApp resource is deleted

    package controller

    import (
        "context"
        "fmt"

        corev1 "k8s.io/api/core/v1"
        apierrors "k8s.io/apimachinery/pkg/api/errors"
        "k8s.io/apimachinery/pkg/api/meta"
        metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
        "k8s.io/apimachinery/pkg/runtime"
        "k8s.io/apimachinery/pkg/types"
        "k8s.io/apimachinery/pkg/util/intstr"
        "k8s.io/client-go/tools/record"
        applicationv1alpha1 "nbfc.io/api/v1alpha1"
        ctrl "sigs.k8s.io/controller-runtime"
        "sigs.k8s.io/controller-runtime/pkg/client"
        "sigs.k8s.io/controller-runtime/pkg/controller/controllerutil"
        "sigs.k8s.io/controller-runtime/pkg/log"
    )

    const myappFinalizer = "application.nbfc.io/finalizer"

    // Definitions to manage status conditions
    const (
        typeAvailableMyApp = "Available"
        typeDegradedMyApp  = "Degraded"
    )

    // MyAppReconciler reconciles a MyApp object
    type MyAppReconciler struct {
        client.Client
        Scheme   *runtime.Scheme
        Recorder record.EventRecorder
    }

    //+kubebuilder:rbac:groups=application.nbfc.io,resources=myapps,verbs=get;list;watch;create;update;patch;delete
    //+kubebuilder:rbac:groups=application.nbfc.io,resources=myapps/status,verbs=get;update;patch
    //+kubebuilder:rbac:groups=application.nbfc.io,resources=myapps/finalizers,verbs=update
    //+kubebuilder:rbac:groups=core,resources=events,verbs=create;patch
    //+kubebuilder:rbac:groups=core,resources=pods,verbs=get;list;watch;create;update;patch;delete
    //+kubebuilder:rbac:groups=core,resources=services,verbs=get;list;watch;create;update;patch;delete

    // Reconcile handles the main logic for creating/updating resources based on the MyApp CR
    func (r *MyAppReconciler) Reconcile(ctx context.Context, req ctrl.Request) (ctrl.Result, error) {
        log := log.FromContext(ctx)

        // Fetch the MyApp resource
        myapp := &applicationv1alpha1.MyApp{}
        err := r.Get(ctx, req.NamespacedName, myapp)
        if err != nil {
            if apierrors.IsNotFound(err) {
                log.Info("myapp resource not found. Ignoring since object must be deleted")
                return ctrl.Result{}, nil
            }
            log.Error(err, "Failed to get myapp")
            return ctrl.Result{}, err
        }

        // Initialize status conditions if none exist
        if len(myapp.Status.Conditions) == 0 {
            meta.SetStatusCondition(&myapp.Status.Conditions, metav1.Condition{Type: typeAvailableMyApp, Status: metav1.ConditionUnknown, Reason: "Reconciling", Message: "Starting reconciliation"})
            if err = r.Status().Update(ctx, myapp); err != nil {
                log.Error(err, "Failed to update MyApp status")
                return ctrl.Result{}, err
            }

            // Re-fetch so later updates work on the latest resource version
            if err := r.Get(ctx, req.NamespacedName, myapp); err != nil {
                log.Error(err, "Failed to re-fetch myapp")
                return ctrl.Result{}, err
            }
        }

        // Add finalizer for cleanup during deletion
        if !controllerutil.ContainsFinalizer(myapp, myappFinalizer) {
            log.Info("Adding Finalizer for MyApp")
            if ok := controllerutil.AddFinalizer(myapp, myappFinalizer); !ok {
                log.Error(err, "Failed to add finalizer into the custom resource")
                return ctrl.Result{Requeue: true}, nil
            }

            if err = r.Update(ctx, myapp); err != nil {
                log.Error(err, "Failed to update custom resource to add finalizer")
                return ctrl.Result{}, err
            }
        }

        // Handle cleanup if MyApp resource is marked for deletion
        isMyAppMarkedToBeDeleted := myapp.GetDeletionTimestamp() != nil
        if isMyAppMarkedToBeDeleted {
            if controllerutil.ContainsFinalizer(myapp, myappFinalizer) {
                log.Info("Performing Finalizer Operations for MyApp before delete CR")

                meta.SetStatusCondition(&myapp.Status.Conditions, metav1.Condition{Type: typeDegradedMyApp,
                    Status: metav1.ConditionUnknown, Reason: "Finalizing",
                    Message: fmt.Sprintf("Performing finalizer operations for the custom resource: %s ", myapp.Name)})

                if err := r.Status().Update(ctx, myapp); err != nil {
                    log.Error(err, "Failed to update MyApp status")
                    return ctrl.Result{}, err
                }

                r.doFinalizerOperationsForMyApp(myapp)

                if err := r.Get(ctx, req.NamespacedName, myapp); err != nil {
                    log.Error(err, "Failed to re-fetch myapp")
                    return ctrl.Result{}, err
                }

                meta.SetStatusCondition(&myapp.Status.Conditions, metav1.Condition{Type: typeDegradedMyApp,
                    Status: metav1.ConditionTrue, Reason: "Finalizing",
                    Message: fmt.Sprintf("Finalizer operations for custom resource %s name were successfully accomplished", myapp.Name)})

                if err := r.Status().Update(ctx, myapp); err != nil {
                    log.Error(err, "Failed to update MyApp status")
                    return ctrl.Result{}, err
                }

                log.Info("Removing Finalizer for MyApp after successfully perform the operations")
                if ok := controllerutil.RemoveFinalizer(myapp, myappFinalizer); !ok {
                    log.Error(err, "Failed to remove finalizer for MyApp")
                    return ctrl.Result{Requeue: true}, nil
                }

                if err := r.Update(ctx, myapp); err != nil {
                    log.Error(err, "Failed to remove finalizer for MyApp")
                    return ctrl.Result{}, err
                }
            }
            return ctrl.Result{}, nil
        }

        // List all Pods matching the MyApp resource
        podList := &corev1.PodList{}
        listOpts := []client.ListOption{
            client.InNamespace(myapp.Namespace),
            client.MatchingLabels(labelsForMyApp(myapp.Name)),
        }
        if err = r.List(ctx, podList, listOpts...); err != nil {
            log.Error(err, "Failed to list pods", "MyApp.Namespace", myapp.Namespace, "MyApp.Name", myapp.Name)
            return ctrl.Result{}, err
        }
        podNames := getPodNames(podList.Items)

        // Record the Pod names in the status when they changed
        if !equalSlices(podNames, myapp.Status.Nodes) {
            myapp.Status.Nodes = podNames
            if err := r.Status().Update(ctx, myapp); err != nil {
                log.Error(err, "Failed to update MyApp status")
                return ctrl.Result{}, err
            }
        }

        // Create missing pods and their services based on MyApp spec
        for _, podSpec := range myapp.Spec.Pods {
            foundPod := &corev1.Pod{}
            err = r.Get(ctx, types.NamespacedName{Name: podSpec.Name, Namespace: myapp.Namespace}, foundPod)
            if err != nil && apierrors.IsNotFound(err) {
                pod := &corev1.Pod{
                    ObjectMeta: metav1.ObjectMeta{
                        Name:      podSpec.Name,
                        Namespace: myapp.Namespace,
                        Labels:    labelsForMyApp(myapp.Name),
                    },
                    Spec: corev1.PodSpec{
                        Containers: []corev1.Container{{
                            Name:    podSpec.Name,
                            Image:   podSpec.Image,
                            Command: podSpec.Command,
                            Args:    podSpec.Args,
                            Ports:   podSpec.ContainerPorts,
                        }},
                    },
                }

                // Make MyApp the owner so the Pod is garbage-collected with it
                if err := ctrl.SetControllerReference(myapp, pod, r.Scheme); err != nil {
                    log.Error(err, "Failed to set controller reference", "Pod.Namespace", pod.Namespace, "Pod.Name", pod.Name)
                    return ctrl.Result{}, err
                }

                log.Info("Creating a new Pod", "Pod.Namespace", pod.Namespace, "Pod.Name", pod.Name)
                if err = r.Create(ctx, pod); err != nil {
                    log.Error(err, "Failed to create new Pod", "Pod.Namespace", pod.Namespace, "Pod.Name", pod.Name)
                    return ctrl.Result{}, err
                }

                // Refetch MyApp after creating the pod
                if err := r.Get(ctx, req.NamespacedName, myapp); err != nil {
                    log.Error(err, "Failed to re-fetch myapp")
                    return ctrl.Result{}, err
                }

                // Update status to reflect successful pod creation
                meta.SetStatusCondition(&myapp.Status.Conditions, metav1.Condition{Type: typeAvailableMyApp,
                    Status: metav1.ConditionTrue, Reason: "Reconciling",
                    Message: fmt.Sprintf("Pod %s for custom resource %s created successfully", pod.Name, myapp.Name)})

                if err := r.Status().Update(ctx, myapp); err != nil {
                    log.Error(err, "Failed to update MyApp status")
                    return ctrl.Result{}, err
                }

                // If the pod exposes ports, create one Service carrying all of them.
                // Creating one Service per port would reuse the same Service name
                // and fail for pods with more than one port.
                if len(podSpec.ContainerPorts) > 0 {
                    servicePorts := make([]corev1.ServicePort, 0, len(podSpec.ContainerPorts))
                    for _, containerPort := range podSpec.ContainerPorts {
                        servicePorts = append(servicePorts, corev1.ServicePort{
                            Name:       containerPort.Name,
                            Port:       containerPort.ContainerPort,
                            TargetPort: intstr.FromInt(int(containerPort.ContainerPort)),
                        })
                    }

                    service := &corev1.Service{
                        ObjectMeta: metav1.ObjectMeta{
                            Name:      fmt.Sprintf("%s-service", podSpec.Name), // Fixed name for the Service
                            Namespace: myapp.Namespace,
                            Labels:    labelsForMyApp(myapp.Name),
                        },
                        Spec: corev1.ServiceSpec{
                            // Note: this selector matches every Pod of the MyApp, not only this one
                            Selector: labelsForMyApp(myapp.Name),
                            Ports:    servicePorts,
                        },
                    }

                    if err := ctrl.SetControllerReference(myapp, service, r.Scheme); err != nil {
                        log.Error(err, "Failed to set controller reference", "Service.Namespace", service.Namespace, "Service.Name", service.Name)
                        return ctrl.Result{}, err
                    }

                    log.Info("Creating a new Service", "Service.Namespace", service.Namespace, "Service.Name", service.Name)
                    if err = r.Create(ctx, service); err != nil {
                        log.Error(err, "Failed to create new Service", "Service.Namespace", service.Namespace, "Service.Name", service.Name)
                        return ctrl.Result{}, err
                    }
                }
            } else if err != nil {
                log.Error(err, "Failed to get Pod")
                return ctrl.Result{}, err
            }
        }

        return ctrl.Result{}, nil
    }

    // doFinalizerOperationsForMyApp runs the cleanup steps before the CR is removed
    func (r *MyAppReconciler) doFinalizerOperationsForMyApp(cr *applicationv1alpha1.MyApp) {
        // Add the cleanup steps that the finalizer should perform here
        log := log.FromContext(context.Background())
        log.Info("Successfully finalized custom resource")
    }

    // SetupWithManager registers the controller and the resources it watches
    func (r *MyAppReconciler) SetupWithManager(mgr ctrl.Manager) error {
        return ctrl.NewControllerManagedBy(mgr).
            For(&applicationv1alpha1.MyApp{}).
            Owns(&corev1.Pod{}).
            Owns(&corev1.Service{}). // Ensure the controller watches Services
            Complete(r)
    }

    // labelsForMyApp returns the labels shared by all resources of a MyApp
    func labelsForMyApp(name string) map[string]string {
        return map[string]string{"app": "myapp", "myapp_cr": name}
    }

    // getPodNames returns the names of the given Pods
    func getPodNames(pods []corev1.Pod) []string {
        var podNames []string
        for _, pod := range pods {
            podNames = append(podNames, pod.Name)
        }
        return podNames
    }

    // equalSlices reports whether a and b hold the same strings in the same order
    func equalSlices(a, b []string) bool {
        if len(a) != len(b) {
            return false
        }
        for i := range a {
            if a[i] != b[i] {
                return false
            }
        }
        return true
    }
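One detail worth a closer look is `equalSlices`, which compares the Pod names element by element and therefore treats the same names in a different order as a change. If the order in which Pods are listed is not stable, the status would be rewritten without any real difference. The sketch below shows a possible order-insensitive alternative; `sameElements` is a hypothetical name, and whether ordering matters in practice is an assumption to verify for your own cluster.

```go
package main

import (
	"fmt"
	"sort"
)

// sameElements reports whether a and b hold the same strings, ignoring order.
// It sorts copies so the callers' slices are left untouched.
func sameElements(a, b []string) bool {
	if len(a) != len(b) {
		return false
	}
	ac := append([]string(nil), a...)
	bc := append([]string(nil), b...)
	sort.Strings(ac)
	sort.Strings(bc)
	for i := range ac {
		if ac[i] != bc[i] {
			return false
		}
	}
	return true
}

func main() {
	fmt.Println(sameElements([]string{"pod1", "pod2"}, []string{"pod2", "pod1"})) // prints "true"
	fmt.Println(sameElements([]string{"pod1"}, []string{"pod3"}))                 // prints "false"
}
```

Sorting copies keeps the helper free of side effects, at the cost of two small allocations per comparison.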

Modify myapp_controller_test.go


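Next, we edit myapp_controller_test.go and add a test case for the reconciliation logic. The test creates a MyApp resource, runs one reconciliation, and checks the resulting Pod and status condition.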

    package controller

    import (
        "context"
        "fmt"
        "os"
        "time"

        //nolint:golint
        . "github.com/onsi/ginkgo/v2"
        . "github.com/onsi/gomega"
        corev1 "k8s.io/api/core/v1"
        "k8s.io/apimachinery/pkg/api/errors"
        "k8s.io/apimachinery/pkg/api/meta"
        metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
        "k8s.io/apimachinery/pkg/types"
        "sigs.k8s.io/controller-runtime/pkg/reconcile"

        applicationv1alpha1 "nbfc.io/api/v1alpha1"
    )

    var _ = Describe("MyApp controller", func() {
        Context("MyApp controller test", func() {

            const MyAppName = "test-myapp"
            const TestPodName = "test-pod"

            ctx := context.Background()

            namespace := &corev1.Namespace{
                ObjectMeta: metav1.ObjectMeta{
                    Name:      MyAppName,
                    Namespace: MyAppName,
                },
            }

            typeNamespaceName := types.NamespacedName{
                Name:      MyAppName,
                Namespace: MyAppName,
            }
            myapp := &applicationv1alpha1.MyApp{}

            BeforeEach(func() {
                By("Creating the Namespace to perform the tests")
                err := k8sClient.Create(ctx, namespace)
                Expect(err).To(Not(HaveOccurred()))

                By("Setting the Image ENV VAR which stores the Operand image")
                err = os.Setenv("MYAPP_IMAGE", "example.com/image:test")
                Expect(err).To(Not(HaveOccurred()))

                By("creating the custom resource for the Kind MyApp")
                err = k8sClient.Get(ctx, typeNamespaceName, myapp)
                if err != nil && errors.IsNotFound(err) {
                    // Let's mock our custom resource in the same way that we would
                    // apply the manifest under config/samples on the cluster
                    myapp := &applicationv1alpha1.MyApp{
                        ObjectMeta: metav1.ObjectMeta{
                            Name:      MyAppName,
                            Namespace: namespace.Name,
                        },
                        Spec: applicationv1alpha1.MyAppSpec{
                            Size: 1,
                            // The controller acts on the pods list, so the test declares one pod
                            Pods: []applicationv1alpha1.PodSpec{{
                                Name:           TestPodName,
                                Image:          "example.com/image:test",
                                ContainerPorts: []corev1.ContainerPort{{Name: "ssh", ContainerPort: 22}},
                            }},
                        },
                    }

                    err = k8sClient.Create(ctx, myapp)
                    Expect(err).To(Not(HaveOccurred()))
                }
            })

            AfterEach(func() {
                By("removing the custom resource for the Kind MyApp")
                found := &applicationv1alpha1.MyApp{}
                err := k8sClient.Get(ctx, typeNamespaceName, found)
                Expect(err).To(Not(HaveOccurred()))

                Eventually(func() error {
                    return k8sClient.Delete(context.TODO(), found)
                }, 2*time.Minute, time.Second).Should(Succeed())

                // TODO(user): Attention if you improve this code by adding other context test you MUST
                // be aware of the current delete namespace limitations.
                // More info: https://book.kubebuilder.io/reference/envtest.html#testing-considerations
                By("Deleting the Namespace to perform the tests")
                _ = k8sClient.Delete(ctx, namespace)

                By("Removing the Image ENV VAR which stores the Operand image")
                _ = os.Unsetenv("MYAPP_IMAGE") // Remove ENV variable after tests
            })

            It("should successfully reconcile a custom resource for MyApp", func() {
                By("Checking if the custom resource was successfully created")
                Eventually(func() error {
                    found := &applicationv1alpha1.MyApp{}
                    return k8sClient.Get(ctx, typeNamespaceName, found)
                }, time.Minute, time.Second).Should(Succeed())

                By("Reconciling the custom resource created")
                myappReconciler := &MyAppReconciler{
                    Client: k8sClient,
                    Scheme: k8sClient.Scheme(),
                }

                _, err := myappReconciler.Reconcile(ctx, reconcile.Request{
                    NamespacedName: typeNamespaceName,
                })
                Expect(err).To(Not(HaveOccurred()))

                By("Checking if the Pod was successfully created in the reconciliation")
                Eventually(func() error {
                    found := &corev1.Pod{}
                    return k8sClient.Get(ctx, types.NamespacedName{Name: TestPodName, Namespace: MyAppName}, found)
                }, time.Minute, time.Second).Should(Succeed())

                By("Checking the Available Status Condition of the MyApp instance")
                Eventually(func() error {
                    // Re-fetch the resource so the check sees the status written by the controller
                    if err := k8sClient.Get(ctx, typeNamespaceName, myapp); err != nil {
                        return err
                    }
                    cond := meta.FindStatusCondition(myapp.Status.Conditions, typeAvailableMyApp)
                    if cond == nil || cond.Status != metav1.ConditionTrue || cond.Reason != "Reconciling" {
                        return fmt.Errorf("The Available status condition of the MyApp instance is not as expected")
                    }
                    return nil
                }, time.Minute, time.Second).Should(Succeed())
            })
        })
    })

Modify application_v1alpha1_myapp.yaml


This YAML file is a sample instance of our custom resource. Make sure it lists the pods, images, commands, and ports we want for MyApp.

    apiVersion: application.nbfc.io/v1alpha1
    kind: MyApp
    metadata:
      name: myapp-sample # Name of the MyApp resource instance
    spec:
      size: 2
      # List of pods to be deployed as part of this MyApp resource
      pods:
        - name: pod2
          image: ubuntu:latest
          command: ["sleep"]
          args: ["infinity"]
        - name: pod1
          image: nginx:latest
          containerPorts:
            - name: http
              containerPort: 80
        - name: pod3
          image: debian:latest
          command: ["sleep"]
          args: ["infinity"]

Modify myapp_types.go


This file defines the Go types from which the CRD schema is generated. Make sure the spec and status definitions match what the controller and the sample manifest expect for MyApp.

    package v1alpha1

    import (
        corev1 "k8s.io/api/core/v1"
        metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"
    )

    // MyAppSpec defines the desired state of MyApp
    type MyAppSpec struct {
        Size          int32     `json:"size,omitempty"`
        ContainerPort int32     `json:"containerPort,omitempty"`
        Pods          []PodSpec `json:"pods,omitempty"`
    }

    // PodSpec defines the desired state of a Pod
    type PodSpec struct {
        Name           string                 `json:"name"`
        Image          string                 `json:"image"`
        Command        []string               `json:"command,omitempty"`
        Args           []string               `json:"args,omitempty"`
        ContainerPorts []corev1.ContainerPort `json:"containerPorts,omitempty"`
    }

    // MyAppStatus defines the observed state of MyApp
    type MyAppStatus struct {
        Conditions []metav1.Condition `json:"conditions,omitempty" patchStrategy:"merge" patchMergeKey:"type" protobuf:"bytes,1,rep,name=conditions"`
        Nodes      []string           `json:"nodes,omitempty"`
    }

    //+kubebuilder:object:root=true
    //+kubebuilder:subresource:status

    // MyApp is the Schema for the myapps API
    type MyApp struct {
        metav1.TypeMeta   `json:",inline"`
        metav1.ObjectMeta `json:"metadata,omitempty"`

        Spec   MyAppSpec   `json:"spec,omitempty"`
        Status MyAppStatus `json:"status,omitempty"`
    }

    //+kubebuilder:object:root=true

    // MyAppList contains a list of MyApp
    type MyAppList struct {
        metav1.TypeMeta `json:",inline"`
        metav1.ListMeta `json:"metadata,omitempty"`
        Items           []MyApp `json:"items"`
    }

    func init() {
        SchemeBuilder.Register(&MyApp{}, &MyAppList{})
    }
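The JSON tags on these types determine which manifest fields end up in which Go fields. As a rough, self-contained illustration, the sketch below decodes the JSON form of a spec into local mirror types. The lowercase types are hypothetical stand-ins (in particular, `containerPort` is a simplified substitute for `corev1.ContainerPort`), and the input is a hand-written fragment modelled on the sample manifest.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// containerPort is a simplified, hypothetical stand-in for corev1.ContainerPort.
type containerPort struct {
	Name          string `json:"name,omitempty"`
	ContainerPort int32  `json:"containerPort"`
}

// podSpec and myAppSpec mirror the PodSpec and MyAppSpec types of the post.
type podSpec struct {
	Name           string          `json:"name"`
	Image          string          `json:"image"`
	Command        []string        `json:"command,omitempty"`
	Args           []string        `json:"args,omitempty"`
	ContainerPorts []containerPort `json:"containerPorts,omitempty"`
}

type myAppSpec struct {
	Size int32     `json:"size,omitempty"`
	Pods []podSpec `json:"pods,omitempty"`
}

// decodeSpec parses the JSON form of a MyApp spec.
func decodeSpec(data []byte) (myAppSpec, error) {
	var spec myAppSpec
	err := json.Unmarshal(data, &spec)
	return spec, err
}

func main() {
	raw := []byte(`{"size": 2, "pods": [{"name": "pod1", "image": "nginx:latest",
		"containerPorts": [{"name": "http", "containerPort": 80}]}]}`)
	spec, err := decodeSpec(raw)
	if err != nil {
		panic(err)
	}
	fmt.Println(spec.Size, spec.Pods[0].Name, spec.Pods[0].ContainerPorts[0].ContainerPort)
}
```

Fields tagged with `omitempty`, such as `command` and `args`, can simply be left out of a manifest, which is why pod1 in the sample needs only a name, an image, and its ports.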

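After these changes, we reinstall the CRD so the cluster picks up the updated schema, and then run the controller again. The run target also regenerates the generated files before starting the controller.

    make install
    make run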

From the project root, deploy the application_v1alpha1_myapp.yaml sample to our Kubernetes cluster:

    kubectl apply -f config/samples/application_v1alpha1_myapp.yaml

Then we check whether the pods are running:

    kubectl get pods -A

Building Kubernetes controllers with KubeBuilder and CRDs lets us extend the platform with our own resource types and the automation around them. Through the process above, we created a custom resource (MyApp) and ran a controller that manages the lifecycle of that resource within the cluster.

Based on the above process, we built a simple Etherpad installation demo, available to test on killercoda. Stay tuned for more k8s-related posts and tricks!