From Containers to KServe and vAccel

We’ve built exactly that: a minimalist C client library for OCI registries, designed for embedding in systems software, unikernel runtimes, and edge-native inference frameworks. The inspiration was ModelPack, a recent CNCF sandbox project that establishes open standards for packaging, distributing and running AI artifacts in the cloud-native environment.

Why a C OCI Client?

Most existing OCI tooling, such as skopeo, ORAS, or Docker, is written in Go or Python. While these tools are excellent for command-line and automation tasks, they’re heavy, dynamically linked, and not suitable for embedding into low-level runtimes or constrained environments.

When you want to pull container artifacts directly into a C codebase (a unikernel launcher, an inference runtime, or an edge orchestrator), your options are limited: you either shell out to external tools or reimplement the OCI registry protocol from scratch.
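To give a sense of what reimplementing the protocol involves: the OCI Distribution Specification serves manifests from /v2/<name>/manifests/<reference> (a tag or a digest) and blobs from /v2/<name>/blobs/<digest>. The minimal sketch below only builds those URLs. oci_build_url is a hypothetical helper, not part of the library, and it assumes the registry string already includes its scheme.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper (not part of the library): build an OCI Distribution
 * API URL of the form <registry>/v2/<repo>/<kind>/<ref>.
 * kind is "manifests" or "blobs"; ref is a tag or a digest.
 * Returns 0 on success, -1 if the URL does not fit in buf. */
int oci_build_url(char *buf, size_t len, const char *registry,
                  const char *repo, const char *kind, const char *ref)
{
    /* Drop a single trailing slash so "https://host/" and "https://host"
     * produce the same URL. */
    size_t rlen = strlen(registry);
    if (rlen > 0 && registry[rlen - 1] == '/')
        rlen--;

    int n = snprintf(buf, len, "%.*s/v2/%s/%s/%s",
                     (int)rlen, registry, repo, kind, ref);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

A client then issues an HTTP GET (with libcurl, for example) against these URLs, adding the auth token as a Bearer header.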

That’s where our OCI Client Library comes in.

About the Project

The library provides a clean, almost dependency-free API for interacting with OCI-compliant registries. It can:

- Retrieve manifests (including multi-architecture ones)

- Download layer blobs by digest

- Extract .tar.gz layers to a filesystem

- Fetch authentication tokens from registries (yes, even for public repositories, you need an auth token!)
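The token step follows the registry token flow: an anonymous request is answered with 401 and a header such as WWW-Authenticate: Bearer realm="https://auth.docker.io/token",service="registry.docker.io",scope="repository:library/ubuntu:pull", and the client then requests a token from <realm>?service=...&scope=... and sends it as a Bearer token on later requests. As a sketch of that flow (not the library's fetch_token code), the hypothetical token_url_from_challenge below extracts the three parameters and builds the token URL. It assumes all three parameters are present, does no URL-encoding, and does not handle escaped quotes.

```c
#include <stdio.h>
#include <string.h>

/* Copy the quoted value of key (e.g. realm) from a Bearer challenge into out.
 * Returns 0 on success, -1 if the key is missing or out is too small.
 * Escaped quotes inside values are not handled in this sketch. */
static int bearer_param(const char *hdr, const char *key, char *out, size_t len)
{
    size_t klen = strlen(key);
    const char *p = hdr;
    while ((p = strstr(p, key)) != NULL) {
        /* Require a parameter boundary before the key and =" after it,
         * so "service" inside a realm URL path is not matched. */
        int at_boundary = (p == hdr || p[-1] == ' ' || p[-1] == ',');
        if (at_boundary && p[klen] == '=' && p[klen + 1] == '"') {
            const char *start = p + klen + 2;
            const char *end = strchr(start, '"');
            if (end == NULL || (size_t)(end - start) >= len)
                return -1;
            memcpy(out, start, (size_t)(end - start));
            out[end - start] = '\0';
            return 0;
        }
        p += klen;
    }
    return -1;
}

/* Hypothetical helper: build <realm>?service=<service>&scope=<scope>
 * from a WWW-Authenticate Bearer challenge. Returns 0 or -1. */
int token_url_from_challenge(const char *hdr, char *buf, size_t len)
{
    char realm[256], service[128], scope[256];
    if (bearer_param(hdr, "realm", realm, sizeof realm) != 0 ||
        bearer_param(hdr, "service", service, sizeof service) != 0 ||
        bearer_param(hdr, "scope", scope, sizeof scope) != 0)
        return -1;
    int n = snprintf(buf, len, "%s?service=%s&scope=%s", realm, service, scope);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

The returned JSON body from the realm carries the token itself; parsing it (for example with cJSON) is left out here.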

Under the hood, it uses libcurl, cJSON, and libarchive, but all those details are hidden. Applications link against a single, self-contained library and call a handful of (we hope) intuitive functions.

A Simpler API

The library’s design philosophy is straightforward: fetching and unpacking container images should be as simple as fetching a file.

Here’s what using it looks like:

```c
oci_client_init();

char *token = fetch_token("https://harbor.nbfc.io", "models/resnet101-v2.7"); // get a token
char *manifest = fetch_manifest("https://harbor.nbfc.io", // registry
                                "models/resnet101-v2.7",  // repo
                                "tvm",                    // tag
                                "amd64",                  // arch
                                "linux",                  // os
                                token);                   // auth

struct OciLayer *layers;
int n = oci_manifest_parse_layers(manifest, &layers); // helper function to parse the layers

// Get the layers one by one, and extract them
for (int i = 0; i < n; i++) {
    struct Memory *blob = fetch_blob("https://harbor.nbfc.io",
                                     "models/resnet101-v2.7",
                                     layers[i].digest,
                                     token,
                                     NULL);
    extract_tar_gz(layers[i].filename, "output");
}

oci_layers_free(layers, n); // cleanup
oci_client_cleanup();       // cleanup
```

That’s all it takes to pull and extract an OCI image layer-by-layer in native C code.
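The "amd64" and "linux" arguments to fetch_manifest presumably pick one entry out of a multi-architecture image index, whose manifests array lists a digest and a platform (architecture, os) per entry. Whether the library does exactly this internally is an assumption; the sketch below only illustrates the selection step over a hypothetical, already-parsed struct index_entry array (the JSON parsing itself is left out).

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified view of one image-index entry:
 * its digest plus platform.architecture and platform.os. */
struct index_entry {
    const char *digest;
    const char *architecture;
    const char *os;
};

/* Return the digest of the first entry matching arch/os, or NULL.
 * Platform variants (e.g. arm64 v8) are ignored in this sketch. */
const char *select_platform(const struct index_entry *entries, size_t n,
                            const char *arch, const char *os)
{
    for (size_t i = 0; i < n; i++) {
        if (strcmp(entries[i].architecture, arch) == 0 &&
            strcmp(entries[i].os, os) == 0)
            return entries[i].digest;
    }
    return NULL;
}
```

The selected digest would then be used to fetch the platform-specific manifest, whose layers are what the loop above downloads.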

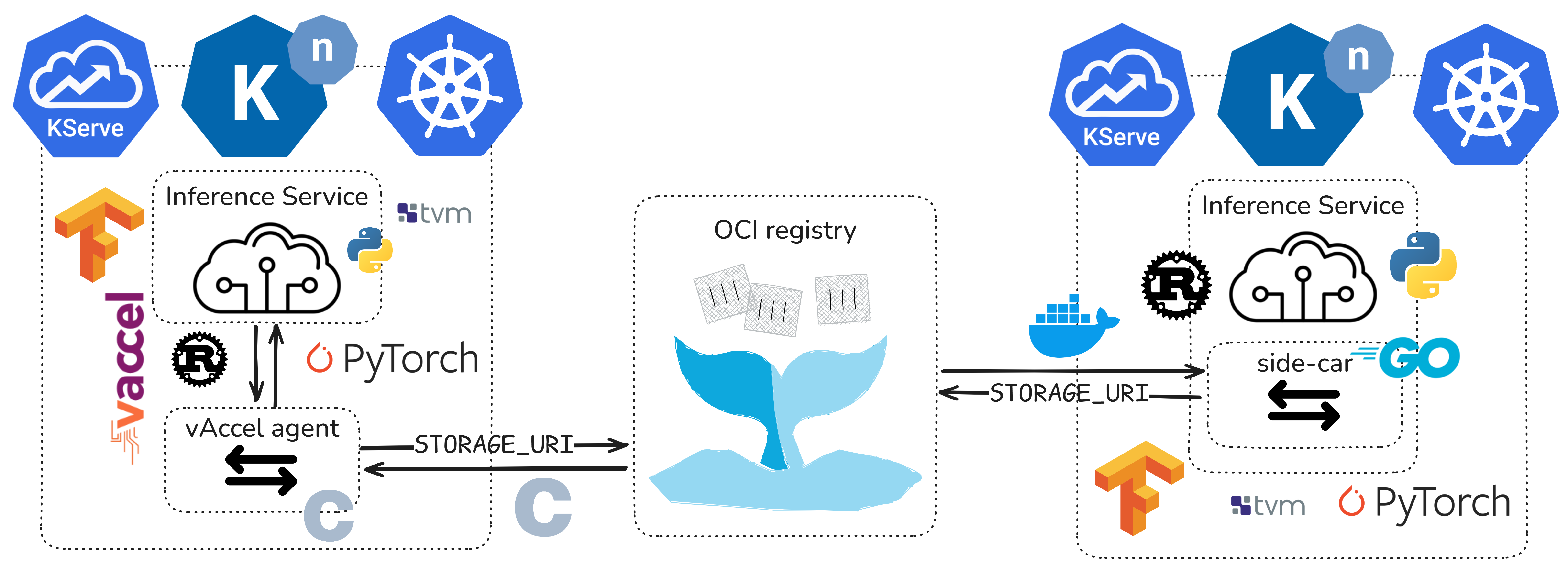

Integration with KServe and vAccel

This library isn’t just for experiments; it’s becoming a key part of our cloud-native acceleration stack. Specifically, in vAccel we refactored Resource handling to allow:

- local files (as before),

- remote files (from URIs), for fetching models, TVM shared objects, etc.,

- multiple files (plain or compressed archives), to support TensorFlow SavedModels, which consist of several files.

The plan is to add OCI as an extra option in vAccel, to simplify the delivery of models to vAccel instances (agents or applications).
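One way to picture the planned OCI option is as one more case when a Resource’s location is inspected. The sketch below classifies a location string by its scheme; the enum and function names are hypothetical and say nothing about how vAccel actually implements Resource handling.

```c
#include <string.h>

/* Hypothetical resource kinds; illustrative only, not vAccel's API. */
enum resource_kind { RES_LOCAL, RES_REMOTE, RES_OCI };

/* Classify a resource location by URI scheme. Anything without a
 * recognised scheme is treated as a local filesystem path. */
enum resource_kind classify_resource(const char *loc)
{
    if (strncmp(loc, "oci://", 6) == 0)
        return RES_OCI;
    if (strncmp(loc, "http://", 7) == 0 || strncmp(loc, "https://", 8) == 0)
        return RES_REMOTE;
    return RES_LOCAL;
}
```

An RES_OCI location would then be handed to the OCI client (token, manifest, blobs, extraction), while remote and local locations keep their existing paths.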

This functionality will enable efficient model fetching in KServe deployments that use vAccel. Instead of relying on KServe’s STORAGE_URI and side-car containers to fetch the models and make them available to the inference service container, we just specify the OCI URI (oci://harbor.nbfc.io/models/resnet101-v2.7:tvm), and the binary artifact becomes available to vAccel as a Resource, ready to be loaded by the relevant plugin/backend.
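Turning such a URI into calls like fetch_manifest means splitting it into registry, repository and tag. A minimal sketch, using a hypothetical parse_oci_uri and struct oci_ref that are not part of the library, and rejecting digest references (repository@sha256:...) and tag-less URIs for simplicity:

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical result type for oci://registry/repository:tag. */
struct oci_ref {
    char registry[256];
    char repository[256];
    char tag[128];
};

/* Split uri into its parts. Returns 0 on success, -1 on malformed input
 * or if a part does not fit. A registry port (host:5000) stays part of
 * the registry, since the tag colon is searched only after the first '/'. */
int parse_oci_uri(const char *uri, struct oci_ref *ref)
{
    static const char prefix[] = "oci://";
    if (strncmp(uri, prefix, sizeof prefix - 1) != 0)
        return -1;
    const char *host = uri + sizeof prefix - 1;
    const char *slash = strchr(host, '/');     /* registry ends here */
    if (slash == NULL || slash == host || strchr(slash, '@') != NULL)
        return -1;
    const char *colon = strrchr(slash, ':');   /* tag starts after this */
    if (colon == NULL || colon == slash + 1 || colon[1] == '\0' ||
        strchr(colon, '/') != NULL)
        return -1;
    int n1 = snprintf(ref->registry, sizeof ref->registry, "%.*s",
                      (int)(slash - host), host);
    int n2 = snprintf(ref->repository, sizeof ref->repository, "%.*s",
                      (int)(colon - slash - 1), slash + 1);
    int n3 = snprintf(ref->tag, sizeof ref->tag, "%s", colon + 1);
    if (n1 < 0 || (size_t)n1 >= sizeof ref->registry ||
        n2 < 0 || (size_t)n2 >= sizeof ref->repository ||
        n3 < 0 || (size_t)n3 >= sizeof ref->tag)
        return -1;
    return 0;
}
```

For the URI above this yields harbor.nbfc.io, models/resnet101-v2.7 and tvm, which map onto the registry (with an https:// prefix), repo and tag arguments of fetch_manifest.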

Additionally, to leverage KServe’s simplified workflow, we could patch the code to allow a custom side-car that fetches the model with this library, without relying on heavyweight Python/Go containers. This would make deployments faster, more portable, and suitable for edge devices with limited resources.

Why Store Models in OCI Registries?

Storing models as OCI artifacts transforms them into first-class, verifiable, and portable software units, aligning ML deployment with modern DevOps and GitOps practices. Using OCI registries to store ML models provides several important benefits:

- Immutability: Layers and manifests are content-addressed, i.e. identified by the digest of their bytes, so a model referenced by digest cannot be tampered with after release (tags, by contrast, can be moved).

- Verification & Trust: Tools like cosign allow signing and verifying models, ensuring integrity and origin.

- Provenance: Registry manifests track digests, timestamps, and annotations, making it easy to track model versions.

- Compatibility: OCI is an open standard, widely supported across cloud providers, edge runtimes, and orchestration systems.

- Layered Storage & Reuse: Common dependencies can be shared across models, reducing storage and bandwidth usage. For example, runtime dependencies can ship as separate layers: the TVM runtime alongside a compiled shared object, or a labels file alongside a ResNet TorchScript model.
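Both immutability and reuse follow from content addressing: a layer is named by the digest of its bytes. A minimal sketch, assuming sha256 digests and a hypothetical in-memory list standing in for a local layer cache (a real client would check a directory or database), of validating a digest’s form and skipping layers that are already present. It does not recompute the hash of downloaded data, which a full client should also do to verify integrity.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Check that d has the form sha256:<64 lowercase hex characters>,
 * the encoding the OCI image spec requires for sha256 digests. */
bool is_sha256_digest(const char *d)
{
    const char *prefix = "sha256:";
    size_t plen = strlen(prefix);
    if (strncmp(d, prefix, plen) != 0 || strlen(d) != plen + 64)
        return false;
    for (const char *p = d + plen; *p; p++) {
        if (!((*p >= '0' && *p <= '9') || (*p >= 'a' && *p <= 'f')))
            return false;
    }
    return true;
}

/* Count layers that still need downloading. Because a digest names
 * exactly one blob, any digest already in the cache can be skipped.
 * cached is a hypothetical stand-in for a real on-disk cache. */
size_t layers_to_fetch(const char *const *layers, size_t n,
                       const char *const *cached, size_t ncached)
{
    size_t missing = 0;
    for (size_t i = 0; i < n; i++) {
        bool hit = false;
        for (size_t j = 0; j < ncached && !hit; j++)
            hit = strcmp(layers[i], cached[j]) == 0;
        if (!hit)
            missing++;
    }
    return missing;
}
```

Two models that share a runtime layer therefore download it once: the second pull finds its digest in the cache.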

Building and Running

To build the library, you need Meson and Ninja, plus the development packages for libcurl, cJSON, and libarchive (on Debian/Ubuntu):

```sh
sudo apt install build-essential meson ninja-build libcurl4-openssl-dev libcjson-dev libarchive-dev
meson setup builddir -Dlibrary_type=shared
meson compile -C builddir
```

Then try the included demo program:

```sh
./builddir/oci_client_demo \
  -r https://harbor.nbfc.io \
  -R models/resnet101-v2.7 \
  -t tvm -a amd64 -o linux -d output
```

It will pull and extract a TVM-enabled shared object for ResNet101 under output/.

Towards OCI-Native Acceleration

With this library, we’re filling a gap in the OCI ecosystem: a native, embeddable C client with minimal dependencies for fetching container artifacts.

We’re excited about what this unlocks for edge computing and AI model lifecycle management.

Get Involved

The code is open source, licensed under Apache-2.0, and available on GitHub.

Contributions, feedback, and integrations are welcome!