According to a well-known and established definition:

“Continuous Integration (CI) is the practice of merging all developers’ working copies to a shared mainline several times a day.”

The practice of Continuous Integration goes hand in hand with automated testing and verification of the integrated code changes, which makes test automation one of the pivotal elements of software development. Test automation can range from a few simple scripts to complex setups employing an entire test suite. However, the challenges escalate significantly when software must be deployed across diverse architectures (such as amd64 and ARM) and varied environments (containers, Kubernetes, bare metal, etc.). The emergence of Kubernetes and its extensive software ecosystem, coupled with the robust tooling provided by GitHub, offers an interesting perspective on automating the build and test phases of software development.

As a company specializing in systems software, we've had to innovate to ensure that our code not only avoids regressions but also remains compatible with multiple architectures, so that it can be deployed in a range of environments. This talk shares our findings and outlines how we use an open-source Kubernetes operator built to scale self-hosted GitHub runners. We also walk through the customisations necessary to align this solution with our specific requirements.

Specifically, we briefly introduce the operator and then dig into the customization of the runners, which are built to support various combinations of system-specific requirements: interacting with VM middleware (libvirt), accessing hardware accelerators (GPUs), setting up a standalone k8s environment and, finally, automating the build of the runners themselves.

To wrap up, we showcase the workflow we use to release vAccel, a software framework we develop that consists of several individual components which interact with each other.

GitHub Actions

GitHub Actions is the Software Development Life Cycle (SDLC) automation platform offered by GitHub. With GitHub Actions, one can define automation workflows alongside the software code already stored in a GitHub repository.

GitHub workflows are written in YAML and are executed on configured runners.

The basic elements of the GitHub Actions framework are:

| GitHub Actions element | Description |
|---|---|
| Event triggers | signals that start a workflow run: an operation on a GitHub repository, a manual or scheduled run, or an external event |
| Workflow | a pipeline of jobs whose steps call actions or other workflows |
| Job | an execution block consisting of steps that run on the same runner |
| Step | an individual unit of a job: a script command or an action invocation |
| Action | a reusable module (e.g. a script or a set of steps) invoked by workflow steps |
| Environment | a build & deployment target of workflow pipelines |
| Runner | a VM, container or pod where workflow jobs are executed |

GitHub Marketplace complements GitHub Actions: it is a public catalogue where a vast number of actions are published and available for reuse.

As a systems software company, our deliverables come in different forms: from simple libraries to executable binaries and entire container images. To accommodate the various build, deployment and testing needs, we have implemented several GitHub workflow pipelines with customised features:

- multi-arch build & unit testing of vAccel components, on self-hosted, custom-built runners

- multi-arch build & testing of vAccel, on self-hosted, custom-built runners

- SDLC support pipelines that automate repetitive tasks

To fulfil the requirements of the various system-specific combinations, we used the following components:

- bare metal k8s cluster

- evryfs k8s operator

- custom built runner images

- GitHub support for self-hosted runners

The following sections of this post describe these custom-made components in detail.

Self-hosted Runners

Generally speaking, self-hosted GitHub runners provide extensive control over the hardware, the operating system and the container images, including hardware-specific components. We take advantage of this control to build, execute and maintain a set of Continuous Integration/Continuous Deployment (CI/CD) pipelines for Nubificus' multi-architecture solutions.

Some of the advantages of self-hosted runners are:

- launching pods on architectures not currently supported by GitHub-hosted runners

- images with hardware-specific customisations

- management & maintenance by the hosting organization

- deployment on both virtual and physical infrastructure

- higher job volumes and more concurrent jobs

- cost efficiency

- persistence, since they need not be ephemeral like GitHub-hosted runners

To get the most out of self-hosted runners while meeting the constraints of a multi-arch pipeline ecosystem, we opted to build an elastic, bare-metal Kubernetes environment, with pods running our custom runner images at its heart.

evryfs operator & runners

Kubernetes Operators are software extensions that use custom resources to manage applications and their components. Operators are very useful because they are application-specific controllers that extend the Kubernetes API to create, configure and manage instances of complex applications on behalf of the user.

To schedule GitHub Actions runner pods in our self-hosted Kubernetes environment while minimizing manual intervention, we chose evryfs/github-actions-runner-operator (evryfs/garo). It suits our needs for controlling autoscaling, multi-arch self-hosted runners in our GitHub Actions CI/CD ecosystem. Nonetheless, we plan to evaluate other available operators in future iterations.

Several reconfigurations were necessary on the GitHub side for the self-hosted runners to get registered, custom labels were introduced for each runner image, and several issues had to be addressed (e.g. autoscaling). We also introduced a Jinja2 templating mechanism to automate the generation of these custom GithubActionRunner resource definitions, such as the following template:

```yaml
apiVersion: garo.tietoevry.com/v1alpha1
kind: GithubActionRunner
metadata:
  name: {{ name }}
  namespace: actions-runner
spec:
  minRunners: {{ min_runners }}
  maxRunners: {{ max_runners }}
  organization: {{ organization }}
  reconciliationPeriod: 1m
  podTemplateSpec:
    metadata:
      labels:
        runner: {{ runner_label }}
    spec:
      containers:
        - name: runner
          env:
            - name: DOCKER_TLS_CERTDIR
              value: /certs
            - name: DOCKER_HOST
              value: tcp://localhost:2376
            - name: DOCKER_TLS_VERIFY
              value: "1"
            - name: DOCKER_CERT_PATH
              value: /certs/client
            - name: GH_ORG
              value: {{ gh_org_value }}
            - name: ACTIONS_RUNNER_INPUT_LABELS
              value: {{ runner_input_labels_value }}
          envFrom:
            - secretRef:
                name: {{ secret_ref_name }}
          image: {{ runner_image }}
          imagePullPolicy: Always
          resources: {}
        - name: docker
          # [snipped]
          env:
            - name: DOCKER_TLS_CERTDIR
              value: /certs
          image: docker:stable-dind
          imagePullPolicy: Always
          args:
            - --mtu=1430
          resources: {}
          securityContext:
            privileged: true
          volumeMounts:
            - mountPath: /var/lib/docker
              name: docker-storage
            - mountPath: /certs
              name: docker-certs
            - mountPath: /home/runner/_work
              name: runner-work
        - name: exporter
          image: {{ exporter_image }}
          ports:
            - containerPort: 3903
              protocol: TCP
          # [snipped]
      nodeSelector:
        {{ node_selector_value }}: "true"
```

Build custom runner images

To build and execute jobs on multiple architectures with specific hardware configurations, we had to instruct the self-hosted runner operator to spawn runners that use our custom, purpose-built Docker images, stored in our private registry.

To avoid conflicts and incompatibilities between specific configurations and library/runtime prerequisites, while also reducing build time and image size, we established several lineages of inter-dependent custom Dockerfiles.

At the core of these lineages sits a base Dockerfile (“Dockerfile.base”), itself derived from ubuntu:20.04, which provides the basic layer upon which most other custom Docker images are built.

This layered, inter-dependent image structure, combined with the automated runner image build process described below, significantly reduces the delivery time of the new custom images our organization needs to deploy the vAccel framework.

Automate the process of building the runner images

To automate building and publishing the custom Docker images for our GitHub Actions runners, we implemented a GitHub Actions pipeline. The pipeline checks out the Dockerfiles and related scripts, along with a declarative JSON file that describes the layering dependencies between the custom images.

The pipeline parses the declarative JSON file, constructs the relevant build and registry-publishing jobs at runtime, and executes them in the correct order, preserving the layered dependencies between the Dockerfiles. The images built by ‘lower’ build-level jobs are available as registry images to the ‘higher’ build-level jobs.

```json
{
  "dockerfile_build_components": [
    {
      "image_filename": "Dockerfile.gcc-lite",
      "tags": "lite",
      "architecture": ["x86_64", "aarch64", "armv7l"],
      "build_level": 0
    },
    {
      "image_filename": "Dockerfile.rust",
      "base_filename": "Dockerfile.gcc-lite",
      "tags": "lite",
      "architecture": ["x86_64", "aarch64", "armv7l"],
      "build_level": 1
    }
  ],
  "output_manifest_tag": "final"
}
```
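The ordering the pipeline derives from this file can be sketched in Python. This is a minimal sketch, not our actual pipeline code: the helper `plan_build_levels` is hypothetical, and it only groups components by `build_level` (ascending) and checks that every `base_filename` is produced at a strictly lower level, so that the base image is already in the registry when a dependent job starts.

```python
import json
from collections import defaultdict

# Same structure as the declarative file above.
CONFIG = """
{
  "dockerfile_build_components": [
    {"image_filename": "Dockerfile.gcc-lite", "tags": "lite",
     "architecture": ["x86_64", "aarch64", "armv7l"], "build_level": 0},
    {"image_filename": "Dockerfile.rust", "base_filename": "Dockerfile.gcc-lite",
     "tags": "lite", "architecture": ["x86_64", "aarch64", "armv7l"], "build_level": 1}
  ],
  "output_manifest_tag": "final"
}
"""


def plan_build_levels(config: dict) -> list[list[dict]]:
    """Hypothetical helper: return the components grouped per level, lowest first.

    Raises ValueError if a component's base image is unknown or is not
    built at a strictly lower level.
    """
    components = config["dockerfile_build_components"]
    level_of = {c["image_filename"]: int(c["build_level"]) for c in components}

    by_level = defaultdict(list)
    for comp in components:
        level = int(comp["build_level"])
        base = comp.get("base_filename")
        if base is not None:
            if base not in level_of:
                raise ValueError(f"{comp['image_filename']}: unknown base {base}")
            if level_of[base] >= level:
                raise ValueError(
                    f"{comp['image_filename']} (level {level}) depends on "
                    f"{base} (level {level_of[base]}), which is not built earlier"
                )
        by_level[level].append(comp)

    return [by_level[level] for level in sorted(by_level)]


if __name__ == "__main__":
    for stage, components in enumerate(plan_build_levels(json.loads(CONFIG))):
        for comp in components:
            for arch in comp["architecture"]:
                print(f"stage {stage}: build {comp['image_filename']} for {arch}")
```

Each stage would become one set of build jobs, and a stage starts only when the previous one has finished.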

Generate descriptors for all runners

runner-ymls is a Python-based tool designed to streamline the deployment of self-hosted GitHub Actions runners on Kubernetes clusters. Leveraging the flexibility of Jinja templates, it lets users easily customize essential parameters such as runner names, types, container images, custom resources and tags. With a focus on simplifying the deployment process, it dynamically generates Kubernetes YAML descriptors tailored to specific requirements.

An example configuration is the following:

```json
{
  "cloudkernels-go": {
    "name": "cloudkernels-go",
    "min_runners": 3,
    "max_runners": 12,
    "runner_label": "cloudkernels-go",
    "runner_value": "runner",
    "gh_org_value": "cloudkernels",
    "runner_input_labels_value": "go",
    "secret_ref_name": "cloudkernels-go-regtoken",
    "runner_image": "harbor.nbfc.io/nubificus/gh-actions-runner-go:final",
    "exporter_image": "harbor.nbfc.io/nubificus/github-actions-runner-metrics:generic",
    "node_selector_value": "cloudkernels/runners",
    "runner_exclude_arch": "arm",
    "cpureq": "200m",
    "cpulim": "4000m",
    "memreq": "1024Mi",
    "memlim": "8192Mi"
  },
  ...
}
```

Some interesting points are the following:

- we define resource requests and limits, to make sure we don't starve the bare-metal nodes where these runners are scheduled.

- we customize the container images for the runner and the exporter; the Docker-in-Docker part of the runner deployment is used as is.

- we have logic to exclude specific architectures (e.g. ARM, as, for instance, Go is not supported on aarch32).

- we can define min/max values for the number of runners.

Essentially, the template shown above and this configuration file let us generate the YAML deployment files dynamically and apply them to our CI cluster. The result is a convenient and efficient way to adapt our CI/CD processes to diverse application needs, with better overall control over our workflows.
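The core of this generation step can be sketched with the standard library alone. This is a hedged sketch, not the runner-ymls code: runner-ymls uses Jinja templates, whereas the hypothetical `render` function below only performs plain `{{ name }}` substitution (no filters, loops or conditionals). The trimmed template, `generate_descriptors` and the `<runner>.yaml` output naming are assumptions for illustration.

```python
import re
from pathlib import Path

# Trimmed-down GithubActionRunner template; only keys present in the sample config.
TEMPLATE = """\
apiVersion: garo.tietoevry.com/v1alpha1
kind: GithubActionRunner
metadata:
  name: {{ name }}
  namespace: actions-runner
spec:
  minRunners: {{ min_runners }}
  maxRunners: {{ max_runners }}
  podTemplateSpec:
    metadata:
      labels:
        runner: {{ runner_label }}
"""

PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, values: dict) -> str:
    """Replace every {{ key }} with values[key]; fail loudly on a missing key."""
    def substitute(match: re.Match) -> str:
        key = match.group(1)
        if key not in values:
            raise KeyError(f"no value for placeholder '{key}'")
        return str(values[key])

    return PLACEHOLDER.sub(substitute, template)


def generate_descriptors(config: dict, out_dir: Path) -> list[Path]:
    """Write one <runner>.yaml per configuration entry (hypothetical layout)."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for key, runner in config.items():
        if runner["min_runners"] > runner["max_runners"]:
            raise ValueError(f"{key}: min_runners exceeds max_runners")
        path = out_dir / f"{key}.yaml"
        path.write_text(render(TEMPLATE, runner))
        written.append(path)
    return written


if __name__ == "__main__":
    import tempfile

    config = {"cloudkernels-go": {"name": "cloudkernels-go", "min_runners": 3,
                                  "max_runners": 12, "runner_label": "cloudkernels-go"}}
    with tempfile.TemporaryDirectory() as tmp:
        for path in generate_descriptors(config, Path(tmp)):
            print(path.read_text())
```

The generated files could then be applied to the cluster with `kubectl apply -f <dir>`. Failing on a missing key, rather than rendering an empty value, keeps an incomplete configuration entry from silently producing a broken runner definition.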

Example CI pipelines

This section presents two example CI pipelines:

- a pipeline that builds custom runner container images

- the vAccel release pipeline

Example pipeline 1: build of GH runner container images

The purpose of this pipeline is to automate the building, tagging and registry upload of the multi-arch container images used by our custom self-hosted GitHub runners.

As stated in the previous section, we took advantage of Docker's image layering and devised a hierarchy tree for our container images. This saves valuable build time and storage, while satisfying constraints around installation dependencies and conflicts.

This Dockerfile lineage is described in a JSON file that specifies build levels, the base Dockerfiles for higher build levels, GitHub runner labels that designate the execution environments, and output tags that mark the produced images.

```json
{
  "dockerfile_build_components": [
    {
      "image_filename": "Dockerfile.gcc-lite",
      "tags": "lite",
      "architecture": ["x86_64", "aarch64", "armv7l"],
      "build_level": 0
    },
    {
      "image_filename": "Dockerfile.rust",
      "base_filename": "Dockerfile.gcc-lite",
      "tags": "lite",
      "architecture": ["x86_64", "aarch64", "armv7l"],
      "build_level": 1
    }
  ],
  "output_manifest_tag": "final"
}
```

Dockerfiles have been modified accordingly:

Dockerfile sample 1: gcc-lite base image, derived from the generic base image uploaded to our registry

```dockerfile
FROM harbor.nbfc.io/nubificus/gh-actions-runner-base:generic

ENV DEBIAN_FRONTEND=noninteractive

SHELL ["/bin/bash", "-o", "pipefail", "-c"]

# Install base packages.
USER root
RUN apt-get update && TZ=Etc/UTC \
    apt-get install -y --no-install-recommends \
    gcc \
    g++ \
    curl && \
    apt-get -y clean && \
    rm -rf /var/cache/apt /var/lib/apt/lists/* /tmp/* /var/tmp/*

# Install gcc-10/g++-10 as the default compilers, plus build-essential, cmake and protobuf tooling
RUN apt-get update && \
    apt-get install -y --no-install-recommends software-properties-common && \
    add-apt-repository -y ppa:ubuntu-toolchain-r/test && \
    apt-get update && \
    apt-get install -y --no-install-recommends gcc-10 g++-10 && \
    update-alternatives --install /usr/bin/gcc gcc /usr/bin/gcc-10 100 --slave /usr/bin/g++ g++ /usr/bin/g++-10 && \
    apt-get install -y --no-install-recommends build-essential cmake protobuf-compiler protobuf-c-compiler libclang-dev && \
    apt-get -y clean && \
    rm -rf /var/cache/apt /var/lib/apt/lists/* /tmp/* /var/tmp/*

USER runner
ENTRYPOINT ["/entrypoint.sh"]
```

Dockerfile sample 2: Rust Dockerfile, which just adds a Rust installation on top of the base image built at level 0

```dockerfile
FROM nubificus_base_build

USER root

# Install rust using rustup (non-interactively, into shared locations)
ENV RUSTUP_HOME=/opt/rust CARGO_HOME=/opt/cargo PATH=/opt/cargo/bin:$PATH
RUN wget --https-only --secure-protocol=TLSv1_2 -O- https://sh.rustup.rs | sh -s -- -y
# Let the unprivileged runner user install crates and toolchains
RUN chmod a+w /opt/cargo /opt/rust

USER runner
ENTRYPOINT ["/entrypoint.sh"]
```
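Sample 2 refers to its parent as `nubificus_base_build` rather than as a registry path, so the pipeline has to make that name resolve to the image built at the previous level. The post does not show how this is done; one plausible approach, sketched below purely as an assumption, is for each higher-level job to pull the lower-level image from the registry and re-tag it locally under the placeholder name before running `docker build`. The helper `build_commands`, the image names and the `<tag>-<arch>` tag scheme are all hypothetical.

```python
import shlex
from typing import Optional

# Name used in the FROM line of sample 2.
PLACEHOLDER_BASE = "nubificus_base_build"


def build_commands(dockerfile: str, image: str, tag: str, arch: str,
                   base_image: Optional[str] = None) -> list[str]:
    """Return the shell commands one per-arch build job could run (hypothetical).

    base_image is the registry reference of the lower-level image, if any.
    Per-arch images are tagged <tag>-<arch> so that they can later be
    combined into a single multi-arch manifest list.
    """
    target = f"{image}:{tag}-{arch}"
    commands = []
    if base_image is not None:
        # Make the placeholder in the FROM line resolve to the lower-level image.
        commands.append(f"docker pull {shlex.quote(base_image)}")
        commands.append(f"docker tag {shlex.quote(base_image)} {PLACEHOLDER_BASE}")
    commands.append(f"docker build -f {shlex.quote(dockerfile)} -t {shlex.quote(target)} .")
    commands.append(f"docker push {shlex.quote(target)}")
    return commands


if __name__ == "__main__":
    for cmd in build_commands(
        "Dockerfile.rust",
        "harbor.nbfc.io/nubificus/gh-actions-runner-rust",  # hypothetical image name
        "lite",
        "aarch64",
        base_image="harbor.nbfc.io/nubificus/gh-actions-runner-gcc-lite:lite-aarch64",
    ):
        print(cmd)
```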

The individual per-architecture images are tagged and uploaded to the organization's private registry as parts of a manifest list. This provides a transparent mechanism for serving the correct architecture-specific image from our private registry under a single tag.
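Assembling such a manifest list can be sketched as follows. This is an illustrative sketch assuming per-architecture images tagged `<tag>-<arch>` (a hypothetical scheme) and the standard `docker manifest create`, `docker manifest annotate` and `docker manifest push` commands; `manifest_commands` and `PLATFORMS` are hypothetical names. The uname-style architecture names used in the JSON file are mapped to the platform names a registry reports (`amd64`, `arm64`, `arm` with variant `v7`), and the list is published under the `output_manifest_tag` value (`final`).

```python
# uname-style names (as in the JSON file) -> (OCI architecture, variant)
PLATFORMS = {
    "x86_64": ("amd64", None),
    "aarch64": ("arm64", None),
    "armv7l": ("arm", "v7"),
}


def manifest_commands(image: str, tag: str, archs: list[str], manifest_tag: str) -> list[str]:
    """Commands that publish one multi-arch tag from per-arch images (hypothetical tags)."""
    manifest = f"{image}:{manifest_tag}"
    per_arch = [f"{image}:{tag}-{arch}" for arch in archs]
    # --amend lets the pipeline re-run without failing on an existing local list.
    commands = [f"docker manifest create --amend {manifest} " + " ".join(per_arch)]
    for arch, ref in zip(archs, per_arch):
        oci_arch, variant = PLATFORMS[arch]
        annotate = f"docker manifest annotate --os linux --arch {oci_arch}"
        if variant:
            annotate += f" --variant {variant}"
        commands.append(f"{annotate} {manifest} {ref}")
    commands.append(f"docker manifest push {manifest}")
    return commands


if __name__ == "__main__":
    for cmd in manifest_commands("harbor.nbfc.io/nubificus/gh-actions-runner-rust",
                                 "lite", ["x86_64", "aarch64", "armv7l"], "final"):
        print(cmd)
```

A client pulling `...:final` then receives the image matching its own platform, which is what lets every runner reference the same tag.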

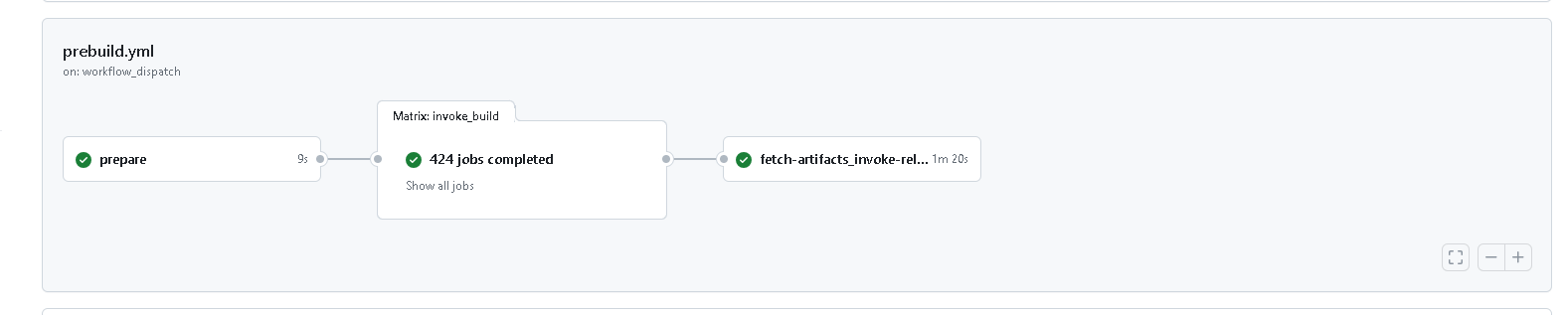

Figure 3 shows a sample execution of the pipeline described in our example:

- the gcc-lite Docker image is built for three architectures and uploaded under a specific tag

- the Rust Docker image is then built on top of the gcc-lite image tagged in the previous step

- setup and cleanup jobs run at the beginning and at the end of the pipeline

Example pipeline 2: vAccel framework build and release pipeline

The purpose of this pipeline is to build a release of the vAccel framework. In general, vAccel consists of three main sets of components: the frontend driver, the backend driver and the runtime components.

The main characteristics of the vAccel framework build pipeline are:

- Configurability: build & release parameters and the details of each build element are all described in manifest.json

- Modularity: reusable workflow modules, organized either by the type of artifact registry (e.g. S3) from which artifacts are collected, or by the component whose source code they build before it is incorporated into the vAccel framework package.

- Dependency orchestration: dependent component builds are executed in ascending order of the build level declared for each component in manifest.json

- Runtime variables ephemeral storage: to exchange information at runtime, we devised a mechanism based on the GitHub Actions variables REST API.

- Multi-arch build outputs: components are built for each supported architecture on self-hosted runners of the same architecture.

Configurability

To ensure that the build & release configuration is stored and maintained in a single location, a declarative manifest JSON file serves as the single source of truth. It describes the details of all vAccel framework components: build-level dependencies, release-related information, workflow execution parameters, etc.

Below is a sample manifest.json file:

```json
{
  "version": "v0.6.0",
  "package_registry": "https://s3.nbfc.io/",
  "package_registry_location": "nbfc-assets/vaccel-release",
  "architecture": ["x86_64", "aarch64"],
  "build_type": ["Debug", "Release"],
  "dry_run": "false",

  "build": {
    "repo_dependencies": [
      {
        "type": "repository",
        "name": "vaccelrt",
        "package_path": "artifacts/",
        "registry": "https://github.com/cloudkernels/vaccelrt.git",
        "artifact_registry_path": "nbfc-assets/vaccel-release",
        "branch": "main",
        "build_level": "0"
      },
      {
        "type": "repository",
        "name": "vaccelrt-torch",
        "package_path": "artifacts/opt/share/",
        "registry": "https://github.com/nubificus/vaccelrt-plugin-torch.git",
        "artifact_registry_path": "nbfc-assets/vaccel-release",
        "branch": "main",
        "runner_tags": ["torch"],
        "build_level": "1"
      },
      {
        "type": "repository",
        "name": "vaccelrt-jetson",
        "package_path": "artifacts/opt/share/",
        "registry": "https://github.com/nubificus/vaccelrt-plugin-jetson.git",
        "artifact_registry_path": "nbfc-assets/vaccel-release",
        "branch": "main",
        "runner_tags": ["jetson"],
        "build_level": "1"
      },
      {
        "type": "repository",
        "name": "vaccelrt-vsock",
        "package_path": "artifacts/opt/lib/",
        "registry": "https://github.com/nubificus/vaccelrt-plugin-vsock.git",
        "artifact_registry_path": "nbfc-assets/vaccel-release",
        "branch": "master",
        "runner_tags": ["rust"],
        "build_level": "1"
      },
      {
        "type": "repository",
        "name": "vaccelrt-agent",
        "package_path": "artifacts/opt/bin/",
        "registry": "https://github.com/nubificus/vaccelrt-agent.git",
        "artifact_registry_path": "nbfc-assets/vaccel-release",
        "branch": "main",
        "runner_tags": ["rust"],
        "build_level": "1"
      },
      {
        "type": "repository",
        "name": "vaccelrt-tensorflow",
        "package_path": "artifacts/opt/share/",
        "registry": "https://github.com/nubificus/vaccelrt-plugin-tensorflow.git",
        "artifact_registry_path": "nbfc-assets/vaccel-release",
        "branch": "main",
        "runner_tags": ["tf"],
        "build_level": "1"
      },
      {
        "type": "repository",
        "name": "vaccelrt-pynq",
        "package_path": "artifacts/opt/lib/",
        "registry": "https://github.com/nubificus/vaccelrt-plugin-pynq.git",
        "artifact_registry_path": "nbfc-assets/vaccel-release",
        "branch": "main",
        "runner_tags": ["gcc"],
        "build_level": "1"
      }
    ],
    "artifact_dependencies": []
  }
}
```

Modularity

Since the vAccel framework comprises three main component sets (the frontend driver, the backend driver and the runtime components), we decided that a modular design suits our build & release pipeline best.

We implemented a multi-level set of reusable workflows, following this high-level design:

```
preprocessor.yml --- processor.yml (top level)
                          |
                          |------- level1_artifacts-collect.yml
                          |              |
                          |              |---- level2_artifact_a.yaml
                          |              |---- level2_artifact_b.yaml
                          |
                          |------- level1_components-build.yml
                                         |
                                         |---- level2-component-build_a.yaml
                                         |---- level2-component-build_b.yaml
                                         |---- level2-component-build_c.yaml
```

This modular design makes it possible to add new runtime components with minimal changes to the existing workflow code, and keeps these changes isolated from the code that builds other components, significantly reducing the risk of regressions.

Dependency orchestration

To build the vAccel framework successfully, several build dependencies between components must be satisfied. To handle them, we devised a multi-stage build process. Build dependencies are declared per build target in manifest.json using the “build_level” field. So far, two build levels are implemented, build_level “0” and build_level “1”; more can be added if necessary.

Components with a lower build_level are built before components with a higher build_level: higher-level components are built only after all lower-level builds have completed. This way, higher-level components can use the dependency artifacts produced in earlier workflow steps.

Dependencies are calculated in the initial steps of the processor workflow: for each build level, a strategy matrix containing only that level's components is produced, and two build jobs are then invoked, one per strategy matrix, the second depending on the first.

Each component is built by its own callable workflow, on the self-hosted GitHub runners that match the component's “runner_tags” in the manifest. The built components are then uploaded to the location designated in the component's section of manifest.json, ready to be used at a later stage. Build information is stored using an ephemeral runtime mechanism based on the GitHub Actions variables REST API.
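The per-level strategy matrices can be sketched as below. This is a minimal sketch under assumptions: it reads the manifest structure shown earlier, converts the string `build_level` values to integers, and emits one `{"include": [...]}` object per level, the shape a dependent job can consume through `fromJSON` in `strategy.matrix`. The matrix keys (`component`, `arch`, `runs_on`) and the assumption that runners carry a custom label named after their architecture are hypothetical.

```python
import json
from collections import defaultdict

# Trimmed manifest with the same shape as manifest.json above.
MANIFEST = {
    "architecture": ["x86_64", "aarch64"],
    "build": {
        "repo_dependencies": [
            {"name": "vaccelrt", "build_level": "0"},
            {"name": "vaccelrt-torch", "runner_tags": ["torch"], "build_level": "1"},
            {"name": "vaccelrt-agent", "runner_tags": ["rust"], "build_level": "1"},
        ]
    },
}


def level_matrices(manifest: dict) -> list[dict]:
    """Return one strategy matrix per build level, in ascending level order."""
    rows_by_level = defaultdict(list)
    for comp in manifest["build"]["repo_dependencies"]:
        level = int(comp["build_level"])  # the manifest stores levels as strings
        for arch in manifest["architecture"]:
            rows_by_level[level].append({
                "component": comp["name"],
                "arch": arch,
                # Hypothetical label set: self-hosted + arch + component runner_tags.
                "runs_on": ["self-hosted", arch, *comp.get("runner_tags", [])],
            })
    return [{"include": rows_by_level[level]} for level in sorted(rows_by_level)]


if __name__ == "__main__":
    for index, matrix in enumerate(level_matrices(MANIFEST)):
        # In a workflow, each JSON string would be exposed as a job output.
        print(f"level {index}: {len(matrix['include'])} jobs")
        print(json.dumps(matrix))
```

A dependent build job could then use `runs-on: ${{ matrix.runs_on }}`, so that each leg lands only on a runner carrying all of the listed labels.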

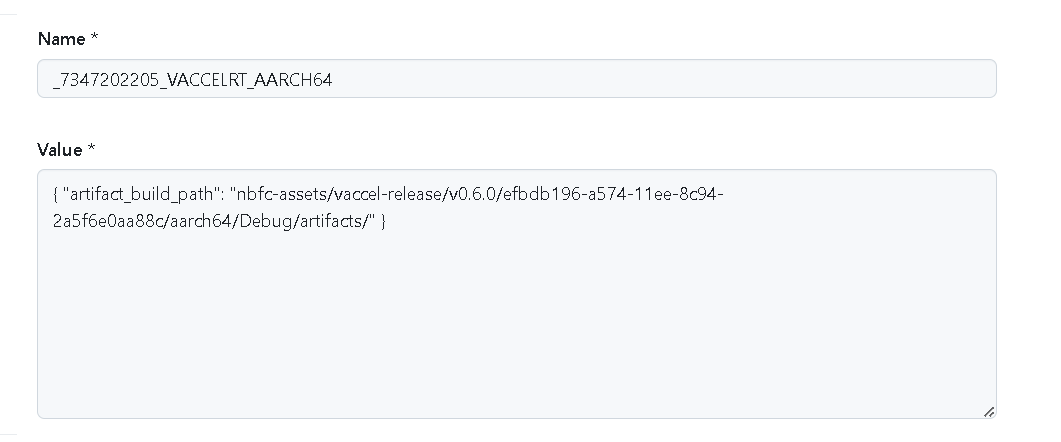

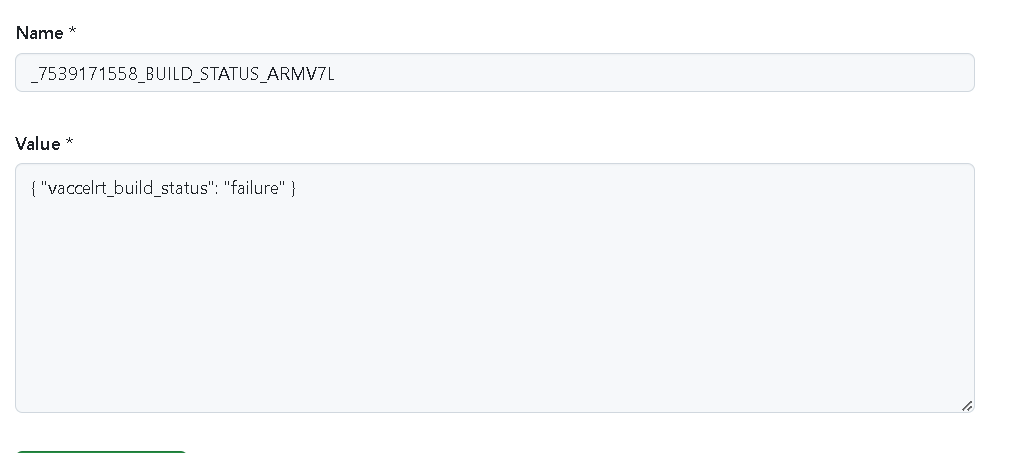

Runtime variables ephemeral storage

As already mentioned, several vAccel components have build dependencies on other components. To make lower-build-level components (built earlier in the same workflow run) available to higher-build-level components, we had to overcome a GitHub Actions constraint: at the time of writing, there is no way to exchange information between dynamically spawned jobs, or between a static job and dependent dynamically spawned jobs.

To overcome this constraint, we devised a mechanism based on the GitHub Actions variables REST API: each job creates repository variables that store runtime information, such as the remote registry path the build output was uploaded to and the status of the build step. Variables follow a unique naming template that combines the component name, the target architecture and the unique GitHub Actions run_id. This way, jobs executed at a later stage of the build can look up specific variables and read their information. To store multiple pieces of information in a single variable, the value of each repository variable is a JSON structure, which can be easily read, updated or manipulated.

Figures 4 and 5 show the relevant examples.
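This convention can be sketched in Python using only the standard library. The sketch relies on the documented repository-variable endpoints (`POST /repos/{owner}/{repo}/actions/variables` to create, `PATCH /repos/{owner}/{repo}/actions/variables/{name}` to update, and `GET` on the same path to read) and assumes a token allowed to manage repository variables. The exact name template is not given in the post, so `variable_name` is a hypothetical rendering of it. Variable names may only contain alphanumerics and underscores, so characters such as `-` in component names have to be replaced.

```python
import json
import re
import urllib.request

API = "https://api.github.com"


def variable_name(component: str, arch: str, run_id: str) -> str:
    """Hypothetical template <COMPONENT>_<ARCH>_<RUN_ID>, sanitized for GitHub."""
    name = re.sub(r"[^A-Za-z0-9_]", "_", f"{component}_{arch}_{run_id}").upper()
    if name[0].isdigit() or name.startswith("GITHUB_"):
        raise ValueError(f"invalid variable name: {name}")
    return name


def variable_request(owner: str, repo: str, token: str, name: str,
                     value: dict, update: bool = False) -> urllib.request.Request:
    """Build (but do not send) a create or update request for a repository variable."""
    url = f"{API}/repos/{owner}/{repo}/actions/variables"
    method = "POST"
    if update:
        url += f"/{name}"
        method = "PATCH"
    # The variable value is itself a JSON document, so several fields fit in one variable.
    body = json.dumps({"name": name, "value": json.dumps(value)}).encode()
    return urllib.request.Request(
        url,
        data=body,
        method=method,
        headers={
            "Accept": "application/vnd.github+json",
            "Authorization": f"Bearer {token}",
            "X-GitHub-Api-Version": "2022-11-28",
        },
    )


if __name__ == "__main__":
    name = variable_name("vaccelrt-torch", "aarch64", "123456789")
    req = variable_request("OWNER", "REPO", "TOKEN", name,
                           {"status": "success", "registry_path": "..."})
    print(req.get_method(), req.full_url, name)
    # urllib.request.urlopen(req) would actually send it.
```

Building the request separately from sending it keeps the naming and payload logic testable without network access.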

Multi-arch build outputs

vAccel is a multi-arch framework: it supports the x86_64, aarch64 and armv7l architectures. To build the framework for multiple architectures, builds have to run on self-hosted GitHub runners of the same architecture. As presented earlier in this post, we devised a mechanism that builds, tags and uploads multi-arch Docker images to our private registry under a single tag.

Self-hosted runners using these custom-built, tagged images are spawned in our Kubernetes cluster, and the components are built on them.

The vAccel build and release pipeline sets the “runs-on” policies of the component build jobs accordingly: this way, jobs are executed only on spawned runners that match the full set of requested labels.

Packaging and release

Once all components have been built successfully, the final packaging steps take place: the components are downloaded from the locations they were uploaded to, the release type is calculated, and the resulting vAccel framework package is uploaded.

The release type (SNAPSHOT, RC or Release) is determined by the details of the triggering GitHub event:

| GitHub Actions trigger | vAccel framework release type |
|---|---|
| Pull request to main branch | RC (Release Candidate) |
| Push to main branch | Release |
| All other cases (e.g. manual execution) | SNAPSHOT |
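The mapping in this table fits in a small function. This is a minimal sketch that assumes the decision is based on the `GITHUB_EVENT_NAME`, `GITHUB_REF` and `GITHUB_BASE_REF` environment variables, which GitHub Actions sets for every job (`GITHUB_BASE_REF` holds the target branch of a pull request). The function name `release_type` is hypothetical.

```python
import os


def release_type(event_name: str, ref: str, base_ref: str = "") -> str:
    """Map the triggering event to a vAccel release type, following the table above."""
    if event_name == "pull_request" and base_ref == "main":
        return "RC"
    if event_name == "push" and ref == "refs/heads/main":
        return "Release"
    # Manual dispatches, pushes to other branches, etc.
    return "SNAPSHOT"


if __name__ == "__main__":
    print(release_type(
        os.environ.get("GITHUB_EVENT_NAME", ""),
        os.environ.get("GITHUB_REF", ""),
        os.environ.get("GITHUB_BASE_REF", ""),
    ))
```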

Figure 6 shows the high-level execution diagram of a sample run of the pipeline described in our example:

- preparatory actions

- two-level build of components

- fetching the built components and packaging the final vAccel framework release