The Serverless computing paradigm has revolutionized the way applications are deployed. In a serverless architecture, developers can focus solely on writing code without the need to provision or manage servers.

Knative is one such serverless framework: it provides a set of essential building blocks on top of Kubernetes that simplify the deployment and management of cloud services and workloads. The user simply containerizes the function (FaaS) to be deployed and describes it in YAML as a Kubernetes object whose type is defined by Knative’s CRDs (a Knative `Service`).

As with every K8s deployment, the workload is executed on the node selected by the scheduler, the core component that decides where pods run within a cluster of nodes.

At a lower level, execution of the workload inside the node is handled by a container manager (Docker, containerd) and the pre-configured “low-level” runtimes (runc, gVisor, Kata Containers). But why would one use a “non-traditional” low-level runtime at all? What is the purpose?

Well, to answer this we need to fully understand certain aspects of containers:

“Containers are packages of software that contain all of the necessary elements to run in any environment. In this way, containers virtualize the operating system and run anywhere from a private data center to the public cloud” …

In the past, developers running and deploying their code directly on servers often ran into “it works on my machine” problems (e.g. misconfigured dependencies). Containerization provides a consistent and isolated environment for applications, ensuring that software runs reliably across platforms.

But wait … isn’t this why we used VMs in the first place?

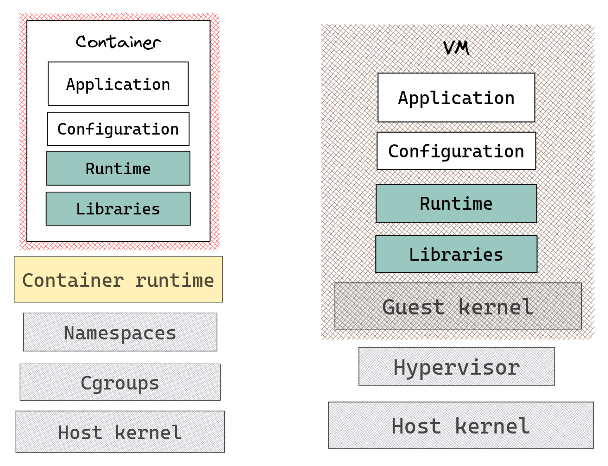

One might argue that this is reminiscent of the original rationale for using virtual machines (VMs) before containerization technologies appeared. Although containers and VMs offer similar resource isolation and allocation advantages (as explained here), their primary architectural difference lies in where the abstraction layer sits, as shown in Figure 1.

Figure 1: Containers vs VMs Architecture

Containers are “an abstraction at the app layer”. Several containers can run on a single machine, sharing the same OS kernel and running as separate, isolated processes in user space. VMs, on the other hand, are “an abstraction of physical hardware”: a hypervisor runs multiple VMs simultaneously on a single machine, and each VM contains a complete operating system instance along with the required applications, binaries, and libraries.

While containers isolate workloads through cgroups and namespaces, they all share the host kernel, so a kernel vulnerability or a container escape puts the underlying host at risk. VMs, on the other hand, run their own kernel and reach the host only through the hypervisor, which adds an extra layer of security between the host and the workload.

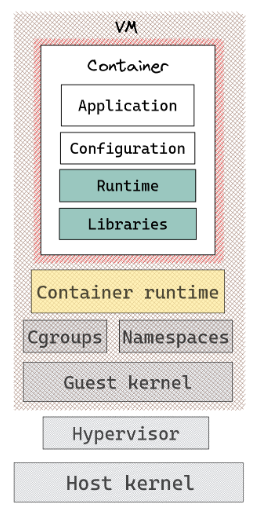

What if we could get the best of both worlds … the security of VMs plus the “lightweight” nature of containers?

Figure 2: Micro-VM’s Architecture

“Micro-VMs provide enhanced security and workload isolation over traditional VMs, while enabling the speed and resource efficiency of containers”.

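Configuring “low-level” runtimes such as Kata Containers and gVisor lets the container manager, and the tools built on top of it such as Kubernetes and Knative, launch workloads in such sandboxes: Kata Containers runs each pod in a lightweight VM, while gVisor interposes its own user-space kernel between the workload and the host.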

In this article, we explore how to use Knative to deploy workloads sandboxed in micro-VMs across various runtimes.

Prerequisites

- Kubernetes Cluster >= v1.25

NOTE: If you have only one node in your cluster, you need at least 6 CPUs, 6 GB of memory, and 30 GB of disk storage. If you have multiple nodes in your cluster, for each node you need at least 2 CPUs, 4 GB of memory, and 20 GB of disk storage.

More information on how we set up our K8s cluster with Knative can be found here.

Knative Configuration

To place Knative Services on designated nodes and to specify the runtime they execute with, first enable the corresponding Knative feature flags by editing the `config-features` ConfigMap:

```shell
kubectl -n knative-serving edit cm config-features
```

```yaml
kubernetes.podspec-affinity: enabled
kubernetes.podspec-runtimeclassname: enabled
```

NOTE: Add the lines under the `data` section, not under `_example`.
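If you prefer a non-interactive change (for example in a setup script), the same two flags can be set with a JSON merge patch instead of `kubectl edit`. This is a minimal sketch: the function names are our own, and it assumes `kubectl` is already pointed at the cluster running Knative Serving.

```shell
# Hypothetical helpers (not part of Knative): enable the two feature flags
# used in this post without opening an editor.

# Print the JSON merge patch for the config-features ConfigMap.
knative_features_patch() {
  printf '%s\n' '{"data":{"kubernetes.podspec-affinity":"enabled","kubernetes.podspec-runtimeclassname":"enabled"}}'
}

# Apply the patch (assumes kubectl can access the knative-serving namespace).
enable_knative_features() {
  kubectl -n knative-serving patch configmap config-features \
    --type merge -p "$(knative_features_patch)"
}
```

A merge patch only touches the keys it names under `data`, so any other flags already set in `config-features` are left as they are.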

runc Deployment

We use a simple helloworld server as the containerized function and deploy it as a Knative Service (saved as `ksvc-hello-world.yaml`):

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: helloworld
  namespace: ktest
spec:
  template:
    metadata: null
    spec:
      containerConcurrency: 10
      containers:
      - env:
        - name: TARGET
          value: Go Sample v1
        image: harbor.nbfc.io/kimage/hello-world@sha256:4554e30f8380ad74003fafe0f7136dc7511a3d437da2bfdc55fb4f913d641951
```

NOTE: To pin the function to specific nodes of the K8s cluster, we can add an `affinity` section to the template spec of the Knative Service, as in the snippet below (`$NODE_NAME` is a placeholder for the target node’s hostname).

```yaml
spec:
  template:
    metadata: null
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values:
                - $NODE_NAME
      containerConcurrency: 10
      containers:
      - env:
        - name: TARGET
          value: Go Sample v1
        image: harbor.nbfc.io/kimage/hello-world@sha256:4554e30f8380ad74003fafe0f7136dc7511a3d437da2bfdc55fb4f913d641951
```
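`$NODE_NAME` in the snippet above is a literal placeholder, so it has to be replaced before the manifest is applied. One way to do this is a small `sed` filter; this is a sketch, and both `fill_node_name` and the file name `ksvc-hello-world-affinity.yaml` used below are hypothetical.

```shell
# Hypothetical helper: replace the literal $NODE_NAME placeholder on stdin
# with the node given as $1 (node names never contain '/', so it is safe in sed).
fill_node_name() {
  sed 's/\$NODE_NAME/'"$1"'/g'
}
```

For example, `fill_node_name worker-1 < ksvc-hello-world-affinity.yaml | kubectl apply -f -`, where `worker-1` stands in for one of your nodes. The `kubernetes.io/hostname` label usually, but not always, equals the node name; `kubectl get nodes -L kubernetes.io/hostname` shows both side by side.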

To test the helloworld Knative Service, we use `curl`. Requests go through the Kourier ingress, so we first retrieve the IP of the `kourier-internal` service and then pass the service’s hostname in the `Host` header:

```
$ kubectl apply -f ksvc-hello-world.yaml
Warning: Kubernetes default value is insecure, Knative may default this to secure in a future release: spec.template.spec.containers[0].securityContext.allowPrivilegeEscalation, spec.template.spec.containers[0].securityContext.capabilities, spec.template.spec.containers[0].securityContext.runAsNonRoot, spec.template.spec.containers[0].securityContext.seccompProfile
service.serving.knative.dev/helloworld created
$ kubectl get ksvc -n ktest
NAME URL LATESTCREATED LATESTREADY READY REASON
helloworld http://helloworld.ktest.svc.cluster.local helloworld-00001 helloworld-00001 True
$ kubectl get svc -A | grep kourier-internal
kourier-system kourier-internal ClusterIP 10.98.240.177 <none> 80/TCP,443/TCP 2d2h
ktest helloworld ExternalName <none> kourier-internal.kourier-system.svc.cluster.local 80/TCP 61s
$ curl -H "Host: helloworld.ktest.svc.cluster.local" http://10.98.240.177
Hello ! The IP is : 10.80.157.91
```
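Rather than copying the ClusterIP and the `Host` value by hand, both can be read from the cluster. A sketch, assuming Kourier is the ingress (as in our setup) and that it runs on a cluster node like the session above; `ksvc_host` and `curl_ksvc` are helper names of our own:

```shell
# Hypothetical helper: strip the scheme from a Knative Service URL,
# leaving the value to send in the Host header.
ksvc_host() {
  local url=$1
  printf '%s\n' "${url#*://}"
}

# Hypothetical helper: curl a Knative Service through kourier-internal.
# Usage: curl_ksvc <ksvc-name> <namespace>   (requires cluster access)
curl_ksvc() {
  local url ip
  url=$(kubectl get ksvc "$1" -n "$2" -o jsonpath='{.status.url}')
  ip=$(kubectl -n kourier-system get svc kourier-internal -o jsonpath='{.spec.clusterIP}')
  curl -H "Host: $(ksvc_host "$url")" "http://$ip"
}
```

With these helpers, `curl_ksvc helloworld ktest` reproduces the last command of the session above.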

NOTE: By default, the “low-level” runtime used by `containerd` is runc, so we don’t need to specify a `RuntimeClass` in K8s or change containerd’s configuration file on the deployment node.
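To confirm which runtime containerd falls back to on a given node, you can look for `default_runtime_name` in the effective configuration printed by `containerd config dump`. The parsing helper below is a sketch (the name is ours) that assumes the key appears as a plain `default_runtime_name = "..."` line, as it does in the CRI plugin section of containerd’s TOML config:

```shell
# Hypothetical helper: read a containerd TOML config on stdin and print
# the value of the first default_runtime_name key.
default_runtime() {
  sed -n 's/^[[:space:]]*default_runtime_name[[:space:]]*=[[:space:]]*"\([^"]*\)".*/\1/p' | head -n 1
}
```

On the deployment node, `sudo containerd config dump | default_runtime` should typically print `runc` on an unmodified installation.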

gVisor Deployment

gVisor is an open-source container runtime that enhances the security and isolation of containerized applications: the application’s system calls are handled by gVisor’s own user-space kernel instead of going straight to the host kernel. To deploy workloads with the gVisor runtime, we first install the gVisor handler binaries and then define a gVisor RuntimeClass in Kubernetes.

Concretely, to run pods with gVisor we need to install `runsc` and `containerd-shim-runsc-v1` on the deployment node:

```shell
(
  set -e
  ARCH=$(uname -m)
  URL=https://storage.googleapis.com/gvisor/releases/release/latest/${ARCH}
  wget ${URL}/runsc ${URL}/runsc.sha512 \
    ${URL}/containerd-shim-runsc-v1 ${URL}/containerd-shim-runsc-v1.sha512
  sha512sum -c runsc.sha512 \
    -c containerd-shim-runsc-v1.sha512
  rm -f *.sha512
  chmod a+rx runsc containerd-shim-runsc-v1
  sudo mv runsc containerd-shim-runsc-v1 /usr/local/bin
)
```
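Before touching containerd’s configuration, it is worth checking that both binaries actually ended up on the `PATH`. A small sketch (`require_bins` is our own helper):

```shell
# Hypothetical helper: return non-zero, naming the culprit, if any of the
# given commands cannot be found on PATH.
require_bins() {
  local bin
  for bin in "$@"; do
    if ! command -v "$bin" >/dev/null 2>&1; then
      echo "missing: $bin" >&2
      return 1
    fi
  done
}
```

For example, `require_bins runsc containerd-shim-runsc-v1 && runsc --version` should print the installed gVisor release.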

Then we add the following lines to containerd’s configuration file (`/etc/containerd/config.toml`):

```toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runsc]
  runtime_type = "io.containerd.runsc.v1"
```
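If you script this step, append the runtime table only when it is not already present: TOML does not allow the same table to be defined twice, so blindly re-running an append would break the file. A sketch; `add_cri_runtime` is our own helper, it assumes the `io.containerd.grpc.v1.cri` layout used throughout this post, and it only detects a header written in exactly this form.

```shell
# Hypothetical helper: append a CRI runtime table to a containerd config
# unless a table with the same header already exists.
# Usage: add_cri_runtime <config.toml> <runtime-name> <runtime_type>
add_cri_runtime() {
  local conf=$1 name=$2 rtype=$3
  local header="[plugins.\"io.containerd.grpc.v1.cri\".containerd.runtimes.$name]"
  if grep -qF "$header" "$conf"; then
    return 0  # already configured, nothing to do
  fi
  printf '\n%s\n  runtime_type = "%s"\n' "$header" "$rtype" >> "$conf"
}
```

Run it as root, e.g. `add_cri_runtime /etc/containerd/config.toml runsc io.containerd.runsc.v1`. It writes only `runtime_type`, so the extra keys used for the Kata runtimes would still have to be added by hand.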

NOTE: We modify the containerd configuration of the node that will run the deployment.

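Then reload the systemd configuration and restart containerd so that it picks up the change. `daemon-reload` only matters if systemd unit files were modified, and in that case it must run before the restart:

```shell
sudo systemctl daemon-reload
sudo systemctl restart containerd
```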

Now let’s create the `gvisor` RuntimeClass:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: gvisor
handler: runsc
EOF
```
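The same RuntimeClass pattern is repeated for every runtime in this post; only `name` and `handler` change. A small generator keeps these manifests consistent. This is a sketch with a helper name of our own; the `handler` must match the runtime name configured under `containerd.runtimes` on the node.

```shell
# Hypothetical helper: print a RuntimeClass manifest.
# Usage: runtimeclass_yaml <name> <handler>
runtimeclass_yaml() {
  cat <<EOF
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: $1
handler: $2
EOF
}
```

For example, `runtimeclass_yaml gvisor runsc | kubectl apply -f -` is equivalent to the command above.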

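NOTE: The `RuntimeClass` Kubernetes object selects the container runtime configuration used to run a Pod’s containers. Its `handler` must match the name of a runtime configured in containerd on the node (here `runsc`).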

By applying the following Knative Service YAML, we deploy our function (a simple hello-world server) with the gVisor runtime:

```shell
cat <<EOF | tee gvisor-ksvc-hello-world.yaml | kubectl apply -f -
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: gvisor-helloworld-0
  namespace: ktest
spec:
  template:
    spec:
      containerConcurrency: 10
      containers:
      - env:
        - name: TARGET
          value: Go Sample v1
        image: harbor.nbfc.io/kimage/hello-world@sha256:4554e30f8380ad74003fafe0f7136dc7511a3d437da2bfdc55fb4f913d641951
      runtimeClassName: gvisor
EOF
```
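Since the Knative Service manifests in this post differ only in their name and `runtimeClassName`, they can be generated from one template. A sketch; `ksvc_yaml` is a hypothetical helper, and the image digest is the one used throughout this post.

```shell
# Hypothetical helper: print the helloworld Knative Service pinned to a
# RuntimeClass. Usage: ksvc_yaml <name> <namespace> <runtimeClassName>
ksvc_yaml() {
  cat <<EOF
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: $1
  namespace: $2
spec:
  template:
    spec:
      containerConcurrency: 10
      containers:
      - env:
        - name: TARGET
          value: Go Sample v1
        image: harbor.nbfc.io/kimage/hello-world@sha256:4554e30f8380ad74003fafe0f7136dc7511a3d437da2bfdc55fb4f913d641951
      runtimeClassName: $3
EOF
}
```

For example, `ksvc_yaml gvisor-helloworld-0 ktest gvisor | tee gvisor-ksvc-hello-world.yaml | kubectl apply -f -` produces the same manifest as above.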

We can test the deployment the same way we did with runc:

```
$ kubectl apply -f gvisor-ksvc-hello-world.yaml
Warning: Kubernetes default value is insecure, Knative may default this to secure in a future release: spec.template.spec.containers[0].securityContext.allowPrivilegeEscalation, spec.template.spec.containers[0].securityContext.capabilities, spec.template.spec.containers[0].securityContext.runAsNonRoot, spec.template.spec.containers[0].securityContext.seccompProfile
service.serving.knative.dev/gvisor-helloworld-0 created
$ kubectl get ksvc -n ktest
NAME URL LATESTCREATED LATESTREADY READY REASON
gvisor-helloworld-0 http://gvisor-helloworld-0.ktest.svc.cluster.local gvisor-helloworld-0-00001 gvisor-helloworld-0-00001 True
$ kubectl get svc -A | grep kourier-internal
kourier-system kourier-internal ClusterIP 10.98.240.177 <none> 80/TCP,443/TCP 2d4h
ktest gvisor-helloworld-0 ExternalName <none> kourier-internal.kourier-system.svc.cluster.local 80/TCP 37s
$ curl -H "Host: gvisor-helloworld-0.ktest.svc.cluster.local" http://10.98.240.177
Hello ! The IP is : 10.80.157.94
```

But how do we know the deployment actually runs via gVisor on the specified node? To validate that everything is set up correctly, we inspect the gVisor processes running on that node:


```
$ ps aux | grep gvisor | grep ktest
root 534472 0.1 0.4 1261980 18488 ? Ssl 14:21 0:00 runsc-gofer --log=/run/containerd/io.containerd.runtime.v2.task/k8s.io/1e5fea157377c9b9d94c81e2921ef35712d5abdf08d3b3c19b138f40b0aacb20/log.json --log-format=json --panic-log=/var/log/pods/ktest_gvisor-helloworld-0-00001-deployment-6896594586-5s4tv_625b38a0-8355-4d44-9d2a-843d898d2c2a/gvisor_panic.log --root=/run/containerd/runsc/k8s.io --log-fd=3 gofer --bundle=/run/containerd/io.containerd.runtime.v2.task/k8s.io/1e5fea157377c9b9d94c81e2921ef35712d5abdf08d3b3c19b138f40b0aacb20 --io-fds=6,7 --mounts-fd=5 --overlay-mediums=0,0 --spec-fd=4 --sync-nvproxy-fd=-1 --sync-userns-fd=-1 --proc-mount-sync-fd=14 --apply-caps=false --setup-root=false
root 534476 2.0 0.8 2411120 35288 ? Ssl 14:21 0:00 runsc-sandbox --root=/run/containerd/runsc/k8s.io --log=/run/containerd/io.containerd.runtime.v2.task/k8s.io/1e5fea157377c9b9d94c81e2921ef35712d5abdf08d3b3c19b138f40b0aacb20/log.json --log-format=json --panic-log=/var/log/pods/ktest_gvisor-helloworld-0-00001-deployment-6896594586-5s4tv_625b38a0-8355-4d44-9d2a-843d898d2c2a/gvisor_panic.log --log-fd=3 --panic-log-fd=4 boot --apply-caps=false --bundle=/run/containerd/io.containerd.runtime.v2.task/k8s.io/1e5fea157377c9b9d94c81e2921ef35712d5abdf08d3b3c19b138f40b0aacb20 --controller-fd=10 --cpu-num=2 --io-fds=5,6 --mounts-fd=7 --overlay-mediums=0,0 --setup-root=false --spec-fd=11 --start-sync-fd=8 --stdio-fds=12,13,14 --total-host-memory=4110331904 --total-memory=4110331904 --user-log-fd=9 --product-name=Standard PC (Q35 + ICH9, 2009) --proc-mount-sync-fd=22 1e5fea157377c9b9d94c81e2921ef35712d5abdf08d3b3c19b138f40b0aacb20
root 534526 0.0 0.4 1261980 17800 ? Sl 14:21 0:00 runsc --root=/run/containerd/runsc/k8s.io --log=/run/containerd/io.containerd.runtime.v2.task/k8s.io/1e5fea157377c9b9d94c81e2921ef35712d5abdf08d3b3c19b138f40b0aacb20/log.json --log-format=json --panic-log=/var/log/pods/ktest_gvisor-helloworld-0-00001-deployment-6896594586-5s4tv_625b38a0-8355-4d44-9d2a-843d898d2c2a/gvisor_panic.log wait 1e5fea157377c9b9d94c81e2921ef35712d5abdf08d3b3c19b138f40b0aacb20
```
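In the output above, the pod is served by a `runsc-sandbox` process (the gVisor sandbox) together with a `runsc-gofer` process, and the pod name appears in the `--panic-log` path. A rough sketch that counts sandboxes per pod from `ps aux` output; `gvisor_sandboxes` is our own helper and relies on that path format, which may change between gVisor releases.

```shell
# Hypothetical helper: count runsc-sandbox processes whose command line
# mentions the given pod name. Reads `ps aux` output on stdin.
gvisor_sandboxes() {
  grep -F 'runsc-sandbox' | grep -cF -- "$1"
}
```

On the node above, `ps aux | gvisor_sandboxes ktest_gvisor-helloworld-0` should print `1`. From the cluster side, `kubectl get pods -n ktest -l serving.knative.dev/service=gvisor-helloworld-0 -o jsonpath='{.items[*].spec.runtimeClassName}'` should print `gvisor`, assuming the `serving.knative.dev/service` label that Knative sets on revision pods.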

Kata Containers

Another option for enhancing the security and isolation of containerized applications is Kata Containers. It runs each pod inside a lightweight virtual machine with its own guest kernel, which provides an additional layer of isolation and mitigates potential security vulnerabilities.

The virtual machines created to execute the workload depend on the chosen hypervisor. Supported hypervisors include QEMU, Firecracker, Dragonball and Cloud Hypervisor.

In the following sections we explore the first three of these options.

Kata-QEMU

To enable the Kata runtime, we first need to install the Kata shim (`containerd-shim-kata-v2`) and `kata-runtime`. We follow this installation guide:

```shell
wget https://github.com/kata-containers/kata-containers/releases/download/3.2.0-alpha4/kata-static-3.2.0-alpha4-amd64.tar.xz

tar xvf kata-static-3.2.0-alpha4-amd64.tar.xz ./
sudo cp -r opt/kata/ /opt/
echo 'PATH="$PATH:/opt/kata/bin"' >> ~/.profile
source ~/.profile
echo $PATH

kata-runtime --version
```

```
$ kata-runtime --version
kata-runtime : 3.2.0-alpha3
   commit : 61a8eabf8e4c3a0ff807f7461b27a1a036ef816e
   OCI specs: 1.0.2-dev
```
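When scripting the installation, it can help to check which release `kata-runtime` actually reports, for example to catch an older binary that shadows the new one on the `PATH`. A sketch with helper names of our own, assuming the `kata-runtime : <version>` first line shown above:

```shell
# Hypothetical helper: extract the release from `kata-runtime --version` on stdin.
kata_version() {
  awk '$1 == "kata-runtime" && $2 == ":" { print $3; exit }'
}

# Hypothetical helper: fail unless the installed kata-runtime reports the
# expected release. Usage: check_kata_version <release>
check_kata_version() {
  local got
  got=$(kata-runtime --version | kata_version)
  [ "$got" = "$1" ] || { echo "expected $1, got $got" >&2; return 1; }
}
```

For instance, `check_kata_version 3.2.0-alpha4` compares the reported release against the tarball downloaded above.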

Next, we make kata-runtime use the QEMU hypervisor. Following the installation guide, we create a wrapper script at `/usr/local/bin/containerd-shim-kata-qemu-v2` that points the Kata shim at the QEMU configuration file, with the following lines:

```shell
#!/bin/bash
# Forward all arguments from containerd unchanged to the Kata shim.
KATA_CONF_FILE=/opt/kata/share/defaults/kata-containers/configuration-qemu.toml /opt/kata/bin/containerd-shim-kata-v2 "$@"
```
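The wrapper scripts for QEMU, Firecracker and Dragonball differ only in their file name, configuration file and shim binary, so one function can generate all of them. A sketch (the helper name is ours); the generated script quotes `"$@"` so that the arguments containerd passes to the shim are forwarded unchanged.

```shell
# Hypothetical helper: write an executable containerd shim wrapper that
# points the Kata shim at one configuration file.
# Usage: write_kata_shim <wrapper-path> <configuration.toml> <shim-binary>
write_kata_shim() {
  cat > "$1" <<EOF
#!/bin/bash
KATA_CONF_FILE=$2 $3 "\$@"
EOF
  chmod +x "$1"
}
```

As root, `write_kata_shim /usr/local/bin/containerd-shim-kata-qemu-v2 /opt/kata/share/defaults/kata-containers/configuration-qemu.toml /opt/kata/bin/containerd-shim-kata-v2` writes the script above and makes it executable in one step.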

Make it executable.

```shell
sudo chmod +x /usr/local/bin/containerd-shim-kata-qemu-v2
```

Now it’s time to tell containerd about the kata-qemu runtime by adding these lines to `/etc/containerd/config.toml`:

```toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata-qemu]
  privileged_without_host_devices = false
  privileged_without_host_devices_all_devices_allowed = false
  runtime_type = "io.containerd.kata-qemu.v2"
  pod_annotations = ["*"]
  container_annotations = ["*"]
```
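Why does the wrapper have to be called `containerd-shim-kata-qemu-v2`? containerd derives the shim binary name from `runtime_type`: as far as we understand its convention, it takes the last two dot-separated components and looks for `containerd-shim-<name>-<version>`. The helper below is our own re-implementation for illustration, not containerd code.

```shell
# Hypothetical helper mirroring containerd's shim naming convention:
# io.containerd.<name>.<version> -> containerd-shim-<name>-<version>
shim_binary_name() {
  local rt=$1 rest version name
  version=${rt##*.}   # last component, e.g. v2
  rest=${rt%.*}       # everything before it
  name=${rest##*.}    # second-to-last component, e.g. kata-qemu
  printf 'containerd-shim-%s-%s\n' "$name" "$version"
}
```

The same rule explains why `io.containerd.runsc.v1` resolves to the `containerd-shim-runsc-v1` binary installed in the gVisor section.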

Reload the systemd configuration and restart containerd (the reload has to come first to have any effect):

```shell
sudo systemctl daemon-reload
sudo systemctl restart containerd.service
```

To verify the containerd configuration, we execute a basic command within a container, specifying the kata-qemu runtime.

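```shell
# ctr does not pull images automatically, so fetch the test image first
sudo ctr image pull docker.io/library/ubuntu:latest
sudo ctr run --runtime io.containerd.kata-qemu.v2 --rm docker.io/library/ubuntu:latest test uname -a
# Linux localhost 6.1.38 #1 SMP Tue Jul 25 16:32:12 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux
```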
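The `uname` output above is the point of the exercise: the kernel reported there belongs to the Kata VM, whereas a runc container would report the host’s kernel, since it shares it. A quick heuristic check; `runs_own_kernel` is a hypothetical helper that only compares kernel release strings, so a guest that happens to run the same release as the host would be a false negative.

```shell
# Hypothetical helper: succeed if the kernel release seen inside a sandbox
# differs from the host's. Usage: runs_own_kernel <release-from-sandbox>
runs_own_kernel() {
  [ "$1" != "$(uname -r)" ]
}
```

For example: `runs_own_kernel "$(sudo ctr run --runtime io.containerd.kata-qemu.v2 --rm docker.io/library/ubuntu:latest test uname -r)" && echo "separate guest kernel"`.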

Now that we know containerd is configured properly, it’s time to define the `kata-qemu` RuntimeClass in Kubernetes:

```shell
cat << EOF | kubectl apply -f -
kind: RuntimeClass
apiVersion: node.k8s.io/v1
metadata:
  name: kata-qemu
handler: kata-qemu
EOF
```

Next, we create another deployment of the helloworld Knative Service, but this time the RuntimeClass points to the `kata-qemu` handler:

```shell
cat <<EOF | tee kata-qemu-ksvc-hello-world.yaml | kubectl apply -f -
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: kata-qemu-helloworld-0
  namespace: ktest
spec:
  template:
    metadata: null
    spec:
      containerConcurrency: 10
      containers:
      - env:
        - name: TARGET
          value: Go Sample v1
        image: harbor.nbfc.io/kimage/hello-world@sha256:4554e30f8380ad74003fafe0f7136dc7511a3d437da2bfdc55fb4f913d641951
      runtimeClassName: kata-qemu
EOF
```

The following output shows the deployment and the function’s response:

```
$ kubectl create ns ktest
namespace/ktest created
$ kubectl apply -f kata-qemu-ksvc-hello-world.yaml
Warning: Kubernetes default value is insecure, Knative may default this to secure in a future release: spec.template.spec.containers[0].securityContext.allowPrivilegeEscalation, spec.template.spec.containers[0].securityContext.capabilities, spec.template.spec.containers[0].securityContext.runAsNonRoot, spec.template.spec.containers[0].securityContext.seccompProfile
service.serving.knative.dev/kata-qemu-helloworld-00 created
$ kubectl get ksvc -n ktest
NAME URL LATESTCREATED LATESTREADY READY REASON
kata-qemu-helloworld-00 http://kata-qemu-helloworld-00.ktest.svc.cluster.local kata-qemu-helloworld-00-00001 kata-qemu-helloworld-00-00001 True
$ kubectl get svc -A | grep kourier-internal
kourier-system kourier-internal ClusterIP 10.98.240.177 <none> 80/TCP,443/TCP 2d4h
ktest kata-qemu-helloworld-00 ExternalName <none> kourier-internal.kourier-system.svc.cluster.local 80/TCP 103s
$ curl -H "Host: kata-qemu-helloworld-00.ktest.svc.cluster.local" http://10.98.240.177
Hello ! The IP is : 10.80.157.99
```

Kata-Firecracker

The same process applies when using kata-runtime with the Firecracker hypervisor. The key difference is that we need the devmapper snapshotter: Firecracker does not support shared filesystems, so the container root filesystem has to be provided to the VM as a virtual block device. Make sure you follow the instructions to set it up correctly in containerd.

To check that devmapper is registered and running:

```shell
sudo dmsetup ls
# containerd-pool (253:0)
sudo ctr plugins ls | grep devmapper
# io.containerd.snapshotter.v1 devmapper linux/amd64 ok
```
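The same check can be scripted, e.g. as a pre-flight step before enabling kata-fc. A sketch that parses `ctr plugins ls` output with the status in the last column, as in the output above; `devmapper_status` is our own helper.

```shell
# Hypothetical helper: print the status column of the devmapper snapshotter
# from `ctr plugins ls` output on stdin (e.g. ok, skip, error).
devmapper_status() {
  awk '$1 == "io.containerd.snapshotter.v1" && $2 == "devmapper" { print $NF }'
}
```

For example: `[ "$(sudo ctr plugins ls | devmapper_status)" = ok ] && sudo dmsetup ls | grep -q containerd-pool && echo "devmapper ready"`.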

It seems devmapper is configured properly!

Now that the containerd-pool is configured, let’s create a wrapper script that points kata-runtime at the Firecracker configuration. Add the following lines to `/usr/local/bin/containerd-shim-kata-fc-v2`:

```shell
#!/bin/bash
# Forward all arguments from containerd unchanged to the Kata shim.
KATA_CONF_FILE=/opt/kata/share/defaults/kata-containers/configuration-fc.toml /opt/kata/bin/containerd-shim-kata-v2 "$@"
```

```shell
sudo chmod +x /usr/local/bin/containerd-shim-kata-fc-v2
```

Then define the kata-fc runtime in containerd:

```toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata-fc]
  privileged_without_host_devices = true
  privileged_without_host_devices_all_devices_allowed = false
  runtime_type = "io.containerd.kata-fc.v2"
  snapshotter = "devmapper"
  pod_annotations = ["*"]
  container_annotations = ["*"]
```

NOTE: We create the `containerd-pool` and modify the containerd configuration on the node that will run the deployment.

Reload the systemd configuration and restart containerd:

```shell
sudo systemctl daemon-reload
sudo systemctl restart containerd.service
```

To test the configuration of kata-fc in containerd, run a simple container with the specified runtime:

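```shell
# ctr does not pull images on its own; unpack the image into devmapper first
sudo ctr image pull --snapshotter devmapper docker.io/library/ubuntu:latest
sudo ctr run --snapshotter devmapper --runtime io.containerd.run.kata-fc.v2 -t --rm docker.io/library/ubuntu:latest ubuntu-kata-fc-test uname -a
# Linux localhost 6.1.38 #1 SMP Tue Jul 25 16:32:12 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux
```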

It appears that everything has been configured correctly!

We now need to define the RuntimeClass in Kubernetes to enable the use of the kata-fc runtime through containerd on the deployment nodes:

```shell
cat << EOF | kubectl apply -f -
kind: RuntimeClass
apiVersion: node.k8s.io/v1
metadata:
  name: kata-fc
handler: kata-fc
EOF
```

Create the Knative Service to be deployed with the `kata-fc` RuntimeClass:

```shell
cat <<EOF | tee kata-fc-ksvc-hello-world.yaml | kubectl apply -f -
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: kata-fc-helloworld-0
  namespace: ktest
spec:
  template:
    spec:
      containerConcurrency: 10
      containers:
      - env:
        - name: TARGET
          value: Go Sample v1
        image: harbor.nbfc.io/kimage/hello-world@sha256:4554e30f8380ad74003fafe0f7136dc7511a3d437da2bfdc55fb4f913d641951
      runtimeClassName: kata-fc
EOF
```

After a successful deployment, we can curl the Knative Service (through the `kourier-internal` service) and check its response:

```
$ kubectl apply -f kata-fc-ksvc-hello-world.yaml
Warning: Kubernetes default value is insecure, Knative may default this to secure in a future release: spec.template.spec.containers[0].securityContext.allowPrivilegeEscalation, spec.template.spec.containers[0].securityContext.capabilities, spec.template.spec.containers[0].securityContext.runAsNonRoot, spec.template.spec.containers[0].securityContext.seccompProfile
service.serving.knative.dev/kata-fc-helloworld-00 created
$ kubectl get ksvc -n ktest
NAME URL LATESTCREATED LATESTREADY READY REASON
kata-fc-helloworld-00 http://kata-fc-helloworld-00.ktest.svc.cluster.local kata-fc-helloworld-00-00001 kata-fc-helloworld-00-00001 True
$ kubectl get svc -A | grep kourier-internal
kourier-system kourier-internal ClusterIP 10.98.240.177 <none> 80/TCP,443/TCP 2d5h
ktest kata-fc-helloworld-00 ExternalName <none> kourier-internal.kourier-system.svc.cluster.local 80/TCP 31s
$ curl -H "Host: kata-fc-helloworld-00.ktest.svc.cluster.local" http://10.98.240.177
Hello ! The IP is : 10.80.157.100
```

Kata-Dragonball

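Another hypervisor option, designed for low CPU utilization and low memory overhead, is Dragonball. Dragonball is built into Kata’s Rust runtime (`runtime-rs`), which is why the wrapper below calls the shim under `/opt/kata/runtime-rs/bin` instead of the one used so far. In the same manner as before, we create a script pointing to the Dragonball configuration by adding the following lines to `/usr/local/bin/containerd-shim-kata-rs-v2`: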

```shell
#!/bin/bash
# Forward all arguments from containerd unchanged to the runtime-rs shim.
KATA_CONF_FILE=/opt/kata/share/defaults/kata-containers/configuration-dragonball.toml /opt/kata/runtime-rs/bin/containerd-shim-kata-v2 "$@"
```

Make it executable.

```shell
sudo chmod +x /usr/local/bin/containerd-shim-kata-rs-v2
```

Add the following lines to containerd’s configuration (`/etc/containerd/config.toml`) to enable the Dragonball hypervisor:

```toml
[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.kata-rs]
  privileged_without_host_devices = true
  privileged_without_host_devices_all_devices_allowed = true
  runtime_type = "io.containerd.kata-rs.v2"
  #snapshotter = "devmapper"
  pod_annotations = ["*"]
  container_annotations = ["*"]
```

Then reload the systemd configuration and restart containerd:

```shell
sudo systemctl daemon-reload
sudo systemctl restart containerd.service
```

Now let’s test that everything is set up correctly:

```shell
sudo ctr run --runtime io.containerd.kata-rs.v2 --rm docker.io/library/ubuntu:latest test-rs uname -a
# Linux localhost 6.1.38 #1 SMP Tue Jul 25 16:32:12 UTC 2023 x86_64 x86_64 x86_64 GNU/Linux
```

Everything seems to work as expected!

To make the Dragonball runtime available in Kubernetes, we define a new RuntimeClass that uses the `kata-rs` handler configured in containerd on the deployment node:

```shell
cat << EOF | kubectl apply -f -
kind: RuntimeClass
apiVersion: node.k8s.io/v1
metadata:
  name: kata-rs
handler: kata-rs
EOF
```

Apply the helloworld Knative Service with the Dragonball runtime:

```shell
cat <<EOF | tee kata-rs-ksvc-hello-world.yaml | kubectl apply -f -
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: kata-rs-helloworld-0
  namespace: ktest
spec:
  template:
    spec:
      containerConcurrency: 10
      containers:
      - env:
        - name: TARGET
          value: Go Sample v1
        image: harbor.nbfc.io/kimage/hello-world@sha256:4554e30f8380ad74003fafe0f7136dc7511a3d437da2bfdc55fb4f913d641951
      runtimeClassName: kata-rs
EOF
```

To test the deployed function, run `curl` as shown below:

```
$ kubectl apply -f kata-rs-ksvc-hello-world.yaml
Warning: Kubernetes default value is insecure, Knative may default this to secure in a future release: spec.template.spec.containers[0].securityContext.allowPrivilegeEscalation, spec.template.spec.containers[0].securityContext.capabilities, spec.template.spec.containers[0].securityContext.runAsNonRoot, spec.template.spec.containers[0].securityContext.seccompProfile
service.serving.knative.dev/kata-rs-helloworld-0 created
$ kubectl get ksvc -n ktest
NAME URL LATESTCREATED LATESTREADY READY REASON
kata-rs-helloworld-0 http://kata-rs-helloworld-0.ktest.svc.cluster.local kata-rs-helloworld-0-00001 kata-rs-helloworld-0-00001 True
$ kubectl get svc -A | grep kourier-internal
kourier-system kourier-internal ClusterIP 10.98.240.177 <none> 80/TCP,443/TCP 2d5h
ktest kata-rs-helloworld-0 ExternalName <none> kourier-internal.kourier-system.svc.cluster.local 80/TCP 97s
$ curl -H "Host: kata-rs-helloworld-0.ktest.svc.cluster.local" http://10.98.240.177
Hello ! The IP is : 10.80.157.102
```

That’s all!