Serverless computing is an approach to cloud computing that lets developers focus on writing and deploying code without managing server infrastructure. In a traditional server-based model, developers must provision and manage the servers that run their applications. With serverless computing, they deploy code as self-contained functions or services that are run and scaled automatically, so applications can be built and shipped quickly without worrying about the underlying infrastructure. Several platforms have emerged to support this model, including AWS Lambda, Google Cloud Functions, and Microsoft Azure Functions. One notable framework is Knative, which builds on the concepts of serverless computing and adds further features. In this blog post, we take a closer look at Knative.

Knative is an open source platform built on top of Kubernetes to facilitate the deployment, scaling, and management of serverless workloads. It was initially released as open source by Google in 2018 and has since received contributions from over 50 companies, including IBM, Red Hat, and SAP.

Knative aims to simplify Kubernetes adoption for serverless computing. It provides best practices to streamline deploying container-based serverless applications on Kubernetes clusters. This lets developers focus on writing code without worrying about infrastructure concerns such as auto-scaling, routing, and monitoring.

Knative serves as a Kubernetes extension by providing tools and abstractions that make it easier to deploy and manage containerized workloads natively on Kubernetes. Over the years, the use of containers in software development has increased rapidly. Knative enhances the developer experience on Kubernetes by packaging code into containers and managing their execution.

Knative has three main components that provide the core functionality for deploying and running serverless workloads on Kubernetes:

1. Build

The Knative Build component automates the process of converting source code into containers. It can build containers from publicly accessible source code. It is flexible and can be configured to meet specific requirements. It also supports various build strategies and can automatically rebuild and update images when the source code changes. Note that Knative Build has since been deprecated in favor of Tekton Pipelines, so current Knative releases ship Serving and Eventing only.

2. Serving

This component focuses on the deployment and scaling of stateless applications, known as “services”. Knative Serving defines a set of Kubernetes objects as Custom Resource Definitions (CRDs). These resources define and control how the serverless workload behaves in the cluster.

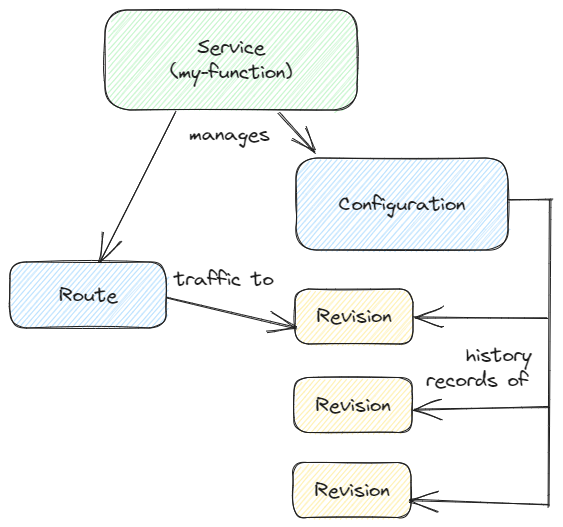

Figure 1: The primary Knative Serving resources are Services, Routes, Configurations, and Revisions

The main resources of Knative Serving are Services, Routes, Configurations, and Revisions:

• Services: Automatically manage the entire lifecycle of the workload. A Service controls the creation of the other objects to ensure that the application has a Route, a Configuration, and a Revision.

• Routes: Map a network endpoint to one or more Revisions.

• Configurations: Maintain the desired state for the application. Modifying a Configuration creates a new Revision.

• Revisions: A point-in-time snapshot of the code and Configuration for each modification made to the workload. Revisions are immutable objects and can be kept for as long as they are useful.
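The relationship between these resources can be sketched in a few lines of Go. This is a toy in-memory model, not Knative's API: the type names and the `Update` and `Valid` helpers are assumptions made up for illustration, and the image name is a placeholder. It only shows the two rules from the list: every Configuration change stamps a new immutable Revision, and a Route maps traffic to one or more Revisions.

```go
package main

import "fmt"

// Revision is an immutable snapshot of the image and environment.
type Revision struct {
	Name  string
	Image string
	Env   map[string]string
}

// Configuration holds the desired state and the revisions created from it.
type Configuration struct {
	service   string
	revisions []Revision
}

// Update records a new desired state and returns the Revision created for it.
func (c *Configuration) Update(image string, env map[string]string) Revision {
	snapshot := make(map[string]string, len(env))
	for k, v := range env {
		snapshot[k] = v // copy, so later changes to env cannot alter the snapshot
	}
	rev := Revision{
		Name:  fmt.Sprintf("%s-%05d", c.service, len(c.revisions)+1),
		Image: image,
		Env:   snapshot,
	}
	c.revisions = append(c.revisions, rev)
	return rev
}

// Route maps a revision name to the percentage of traffic it receives.
type Route map[string]int

// Valid reports whether the traffic percentages add up to 100.
func (r Route) Valid() bool {
	total := 0
	for _, pct := range r {
		total += pct
	}
	return total == 100
}

func main() {
	cfg := &Configuration{service: "hello"}
	v1 := cfg.Update("example.com/hello:v1", map[string]string{"TARGET": "World"})
	v2 := cfg.Update("example.com/hello:v1", map[string]string{"TARGET": "Knative"})

	// Send 10% of the traffic to the new revision, the rest to the old one.
	route := Route{v1.Name: 90, v2.Name: 10}
	fmt.Println(v1.Name, v2.Name, route.Valid())
}
```

Splitting traffic by percentage across revisions is what makes gradual rollouts possible: the old revision keeps serving while the new one is tried out.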

3. Eventing

Eventing describes the functionality of Knative that allows it to define the behavior of a container based on specific events. That is, different events can trigger specific container-based operations.
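To make this concrete, here is a minimal sketch of an event consumer in Go. Knative Eventing delivers events to a container as CloudEvents over HTTP, and in binary content mode the event type travels in the `Ce-Type` header. Everything else here is an assumption for illustration: the event type names, the `handlers` table, and the `deliver` helper, which simulates a delivery in memory instead of running inside a cluster.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
)

// handlers maps an event type to the operation it should trigger.
// The event type names are made up for this example.
var handlers = map[string]func(body string) string{
	"com.example.order.created":   func(b string) string { return "processing order: " + b },
	"com.example.order.cancelled": func(b string) string { return "cancelling order: " + b },
}

// receive reads the event type from the Ce-Type header and runs the
// operation registered for it.
func receive(w http.ResponseWriter, r *http.Request) {
	handle, ok := handlers[r.Header.Get("Ce-Type")]
	if !ok {
		http.Error(w, "unknown event type", http.StatusBadRequest)
		return
	}
	body, err := io.ReadAll(r.Body)
	if err != nil {
		http.Error(w, "cannot read event data", http.StatusBadRequest)
		return
	}
	fmt.Fprint(w, handle(string(body)))
}

// deliver simulates one event delivery and returns the status code and body.
func deliver(eventType, data string) (int, string) {
	req := httptest.NewRequest(http.MethodPost, "/", strings.NewReader(data))
	req.Header.Set("Ce-Type", eventType)
	rec := httptest.NewRecorder()
	receive(rec, req)
	return rec.Code, strings.TrimSpace(rec.Body.String())
}

func main() {
	fmt.Println(deliver("com.example.order.created", "42"))
	fmt.Println(deliver("com.example.unknown", "42"))
}
```

A real consumer would more likely use the CloudEvents SDK rather than reading headers by hand; the plain `net/http` version is used here only to keep the example dependency-free.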

Now let’s see how to install Knative in a Kubernetes cluster!

Installing Knative Serving

Prerequisites

- We need to install kubectl.

- A Kubernetes cluster is required for the installation (we tested this on Kubernetes v1.24).

- Ensure that the Kubernetes cluster has access to the internet.

Let’s start!

First, install Homebrew on your system.

- Run the update commands

sudo apt update

sudo apt-get install build-essential

- Install Git

sudo apt install git -y

- Run Homebrew installation script

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

- Add Homebrew to your PATH

(echo; echo 'eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"') >> /home/$USER/.bashrc
eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"

- Check system for potential problems

brew doctor

Verifying image signatures

For cosign:

brew install cosign

and check the version with the command:

cosign version

Output:

ubuntuVM:~$ cosign version
  ______   ______        _______. __    _______ .__   __.
 /      | /  __  \      /       ||  |  /  _____||  \ |  |
|  ,----'|  |  |  |    |   (----`|  | |  |  __  |   \|  |
|  |     |  |  |  |     \   \    |  | |  | |_ | |  . `  |
|  `----.|  `--'  | .----)   |   |  | |  |__| | |  |\   |
 \______| \______/  |_______/    |__|  \______| |__| \__|
cosign: A tool for Container Signing, Verification and Storage in an OCI registry.

GitVersion: 2.2.0
GitCommit: 546f1c5b91ef58d6b034a402d0211d980184a0e5
GitTreeState: "clean"
BuildDate: 2023-08-31T18:52:52Z
GoVersion: go1.21.0
Compiler: gc
Platform: linux/amd64

For jq:

sudo apt-get install jq -y

and check the version with:

jq --version

Output:

ubuntuVM:~$ jq --version
jq-1.6

- Extract the images from a manifest and verify the signatures.

curl -sSL https://github.com/knative/serving/releases/download/knative-v1.10.1/serving-core.yaml \
  | grep 'gcr.io/' | awk '{print $2}' | sort | uniq \
  | xargs -n 1 \
    cosign verify -o text \
      --certificate-identity=signer@knative-releases.iam.gserviceaccount.com \
      --certificate-oidc-issuer=https://accounts.google.com

Output:

Verification for gcr.io/knative-releases/knative.dev/serving/cmd/webhook@sha256:ce5f0144cf58b25fcf4027f69b7a0d616c7b72e7ff4e2a133a04a2b3c35fd7da --
The following checks were performed on each of these signatures:
  - The cosign claims were validated
  - Existence of the claims in the transparency log was verified offline
  - The code-signing certificate was verified using trusted certificate authority certificates
Certificate subject: signer@knative-releases.iam.gserviceaccount.com
Certificate issuer URL: https://accounts.google.com
{"critical":{"identity":{"docker-reference":"gcr.io/knative-releases/knative.dev/serving/cmd/webhook"},"image":{"docker-manifest-digest":"sha256:ce5f0144cf58b25fcf4027f69b7a0d616c7b72e7ff4e2a133a04a2b3c35fd7da"},"type":"cosign container image signature"},"optional":null}

Install the Knative Serving component

- Install the required custom resources by running the command:

kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.10.2/serving-crds.yaml

Output:

ubuntu@ubuntuVM:~$ kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.10.2/serving-crds.yaml
customresourcedefinition.apiextensions.k8s.io/certificates.networking.internal.knative.dev created
customresourcedefinition.apiextensions.k8s.io/configurations.serving.knative.dev created
customresourcedefinition.apiextensions.k8s.io/clusterdomainclaims.networking.internal.knative.dev created
customresourcedefinition.apiextensions.k8s.io/domainmappings.serving.knative.dev created
customresourcedefinition.apiextensions.k8s.io/ingresses.networking.internal.knative.dev created
customresourcedefinition.apiextensions.k8s.io/metrics.autoscaling.internal.knative.dev created
customresourcedefinition.apiextensions.k8s.io/podautoscalers.autoscaling.internal.knative.dev created
customresourcedefinition.apiextensions.k8s.io/revisions.serving.knative.dev created
customresourcedefinition.apiextensions.k8s.io/routes.serving.knative.dev created
customresourcedefinition.apiextensions.k8s.io/serverlessservices.networking.internal.knative.dev created
customresourcedefinition.apiextensions.k8s.io/services.serving.knative.dev created
customresourcedefinition.apiextensions.k8s.io/images.caching.internal.knative.dev created
ubuntu@ubuntuVM:~$

- Install the core components of Knative Serving

kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.10.2/serving-core.yaml

Output:

ubuntuVM:~$ kubectl apply -f https://github.com/knative/serving/releases/download/knative-v1.10.2/serving-core.yaml
namespace/knative-serving created
clusterrole.rbac.authorization.k8s.io/knative-serving-aggregated-addressable-resolver created
clusterrole.rbac.authorization.k8s.io/knative-serving-addressable-resolver created
clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-admin created
clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-edit created
clusterrole.rbac.authorization.k8s.io/knative-serving-namespaced-view created
clusterrole.rbac.authorization.k8s.io/knative-serving-core created
clusterrole.rbac.authorization.k8s.io/knative-serving-podspecable-binding created
serviceaccount/controller created
clusterrole.rbac.authorization.k8s.io/knative-serving-admin created
clusterrolebinding.rbac.authorization.k8s.io/knative-serving-controller-admin created
clusterrolebinding.rbac.authorization.k8s.io/knative-serving-controller-addressable-resolver created
customresourcedefinition.apiextensions.k8s.io/images.caching.internal.knative.dev unchanged
customresourcedefinition.apiextensions.k8s.io/certificates.networking.internal.knative.dev unchanged
customresourcedefinition.apiextensions.k8s.io/configurations.serving.knative.dev unchanged
customresourcedefinition.apiextensions.k8s.io/clusterdomainclaims.networking.internal.knative.dev unchanged
customresourcedefinition.apiextensions.k8s.io/domainmappings.serving.knative.dev unchanged
customresourcedefinition.apiextensions.k8s.io/ingresses.networking.internal.knative.dev unchanged
customresourcedefinition.apiextensions.k8s.io/metrics.autoscaling.internal.knative.dev unchanged
customresourcedefinition.apiextensions.k8s.io/podautoscalers.autoscaling.internal.knative.dev unchanged
customresourcedefinition.apiextensions.k8s.io/revisions.serving.knative.dev unchanged
customresourcedefinition.apiextensions.k8s.io/routes.serving.knative.dev unchanged
customresourcedefinition.apiextensions.k8s.io/serverlessservices.networking.internal.knative.dev unchanged
customresourcedefinition.apiextensions.k8s.io/services.serving.knative.dev unchanged
secret/serving-certs-ctrl-ca created
secret/knative-serving-certs created
secret/control-serving-certs created
secret/routing-serving-certs created
image.caching.internal.knative.dev/queue-proxy created
configmap/config-autoscaler created
configmap/config-defaults created
configmap/config-deployment created
configmap/config-domain created
configmap/config-features created
configmap/config-gc created
configmap/config-leader-election created
configmap/config-logging created
configmap/config-network created
configmap/config-observability created
configmap/config-tracing created
horizontalpodautoscaler.autoscaling/activator created
poddisruptionbudget.policy/activator-pdb created
deployment.apps/activator created
service/activator-service created
deployment.apps/autoscaler created
service/autoscaler created
deployment.apps/controller created
service/controller created
deployment.apps/domain-mapping created
deployment.apps/domainmapping-webhook created
service/domainmapping-webhook created
horizontalpodautoscaler.autoscaling/webhook created
poddisruptionbudget.policy/webhook-pdb created
deployment.apps/webhook created
service/webhook created
validatingwebhookconfiguration.admissionregistration.k8s.io/config.webhook.serving.knative.dev created
mutatingwebhookconfiguration.admissionregistration.k8s.io/webhook.serving.knative.dev created
mutatingwebhookconfiguration.admissionregistration.k8s.io/webhook.domainmapping.serving.knative.dev created
secret/domainmapping-webhook-certs created
validatingwebhookconfiguration.admissionregistration.k8s.io/validation.webhook.domainmapping.serving.knative.dev created
validatingwebhookconfiguration.admissionregistration.k8s.io/validation.webhook.serving.knative.dev created
secret/webhook-certs created
ubuntu@ubuntuVM:~$

Install a networking layer

The following commands install Kourier as the networking layer and enable its Knative integration.

- Install the Knative Kourier controller:

kubectl apply -f https://github.com/knative/net-kourier/releases/download/knative-v1.10.0/kourier.yaml

Output:

ubuntuVM:~$ kubectl apply -f https://github.com/knative/net-kourier/releases/download/knative-v1.10.0/kourier.yaml
namespace/kourier-system created
configmap/kourier-bootstrap created
configmap/config-kourier created
serviceaccount/net-kourier created
clusterrole.rbac.authorization.k8s.io/net-kourier created
clusterrolebinding.rbac.authorization.k8s.io/net-kourier created
deployment.apps/net-kourier-controller created
service/net-kourier-controller created
deployment.apps/3scale-kourier-gateway created
service/kourier created
service/kourier-internal created
horizontalpodautoscaler.autoscaling/3scale-kourier-gateway created
poddisruptionbudget.policy/3scale-kourier-gateway-pdb created
ubuntu@ubuntuVM:~$

- Configure Knative Serving to use Kourier by default:

kubectl patch configmap/config-network \
  --namespace knative-serving \
  --type merge \
  --patch '{"data":{"ingress-class":"kourier.ingress.networking.knative.dev"}}'

Output:

ubuntuVM:~$ kubectl patch configmap/config-network \
  --namespace knative-serving \
  --type merge \
  --patch '{"data":{"ingress-class":"kourier.ingress.networking.knative.dev"}}'
configmap/config-network patched

- Fetch the CNAME by running the command:

kubectl --namespace kourier-system get service kourier

Output:

kubectl --namespace kourier-system get service kourier
NAME      TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                      AGE
kourier   LoadBalancer   10.101.236.147   <pending>     80:30556/TCP,443:30311/TCP   110s

Verify the installation

Verify the installation with the command:

kubectl get pods -n knative-serving

Output:

ubuntuVM:~$ kubectl get pods -n knative-serving
NAME                                     READY   STATUS    RESTARTS   AGE
activator-86c78bb8f7-ph7h5               1/1     Running   0          4m38s
autoscaler-7c84b476b7-lvmr6              1/1     Running   0          4m38s
controller-7f594656c5-nsnpm              1/1     Running   0          4m38s
domain-mapping-864fb56bd6-vqpd5          1/1     Running   0          4m38s
domainmapping-webhook-5c969d8f6b-n4h9b   1/1     Running   0          4m37s
net-kourier-controller-f8d886c4d-z2vxl   1/1     Running   0          2m47s
webhook-5d44c4d7d9-8kznn                 1/1     Running   0          4m37s
ubuntu@ubuntuVM:~$

Now let’s see some examples of a Knative Function and a Knative Service.

Knative Functions

Knative Functions provides a simple programming model for deploying serverless functions, without requiring in-depth knowledge of Kubernetes, containers, or Dockerfiles.

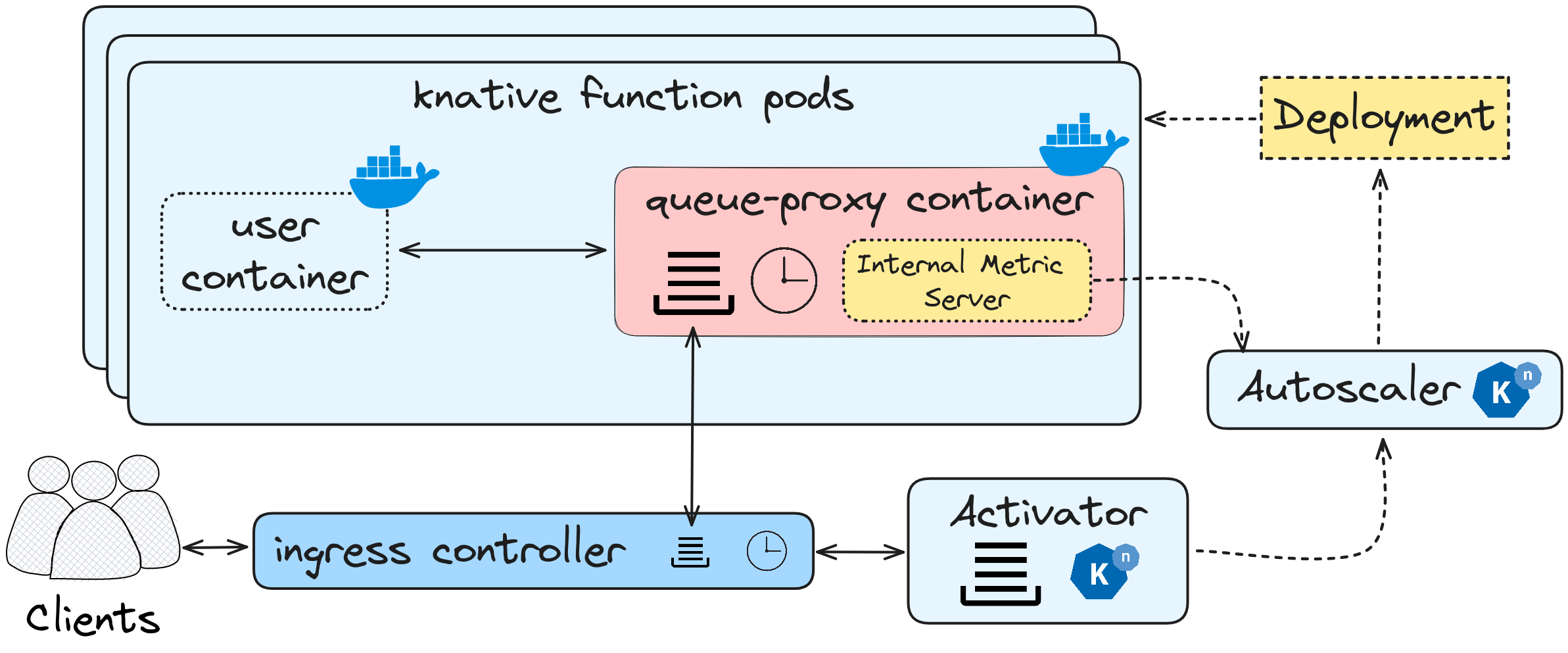

Figure 2: Knative Function architecture

During the first invocation of a Knative Function, clients send their requests to the ingress controller, which sits outside the Knative stack and acts as the entry point. From there, the ingress controller forwards the requests to the activator component within the Knative stack.

The activator maintains a queue and buffers the incoming requests. It then communicates with the autoscaler, which is responsible for scaling the Knative function pods. On the initial invocation no pod is running for the function yet, so the autoscaler triggers a scale-up and a Knative pod is spawned.

Inside the Knative pod, the queue-proxy container plays a crucial role. It is responsible for handling metrics, managing incoming requests, and coordinating the responses. It acts as an intermediary between external requests and the user container, where the actual user code runs, and ensures the proper flow of data between the client’s request and the execution of the user code.

Subsequent invocations follow a similar flow. Requests from the clients still go through the ingress controller, which routes them to the queue-proxy container. The queue-proxy container, in turn, forwards the requests to the user container, where the user code is executed.
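The intermediary role of the proxy container can be sketched as a small Go HTTP wrapper. This is not Knative's queue-proxy code, only an illustration under simple assumptions: the `countingProxy` type and the `demo` helper are hypothetical names, the "user container" is just an in-process handler, and the only metrics tracked are the in-flight and total request counts.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"sync/atomic"
)

// countingProxy stands in for the proxy container: it forwards every request
// to the user handler and tracks how many requests are in flight.
type countingProxy struct {
	user     http.Handler
	inFlight int64 // requests currently being handled
	total    int64 // requests seen since start
}

func (p *countingProxy) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	atomic.AddInt64(&p.total, 1)
	atomic.AddInt64(&p.inFlight, 1)
	defer atomic.AddInt64(&p.inFlight, -1)
	p.user.ServeHTTP(w, r) // the "user container" does the real work
}

// demo sends one request through the proxy and returns the response body,
// the in-flight count after the request finished, and the total count.
func demo() (string, int64, int64) {
	p := &countingProxy{}
	p.user = http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		// While user code runs, the proxy counts this request as in flight.
		fmt.Fprintf(w, "in-flight=%d", atomic.LoadInt64(&p.inFlight))
	})
	rec := httptest.NewRecorder()
	p.ServeHTTP(rec, httptest.NewRequest(http.MethodGet, "/", nil))
	return rec.Body.String(), atomic.LoadInt64(&p.inFlight), atomic.LoadInt64(&p.total)
}

func main() {
	body, inFlight, total := demo()
	fmt.Println(body, "| after:", inFlight, "| total:", total)
}
```

The in-flight count is the interesting number here: it is a direct measure of concurrency, which is the kind of signal a scaling decision can be based on.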

Additionally, the queue-proxy container collects metrics and reports them to the autoscaler. These metrics help the autoscaler determine the appropriate scaling actions for the Knative function pods, so they can scale up or down efficiently based on workload demand.
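As a rough illustration of such a scaling decision, the sketch below derives a pod count from the number of concurrent requests. It is a deliberate simplification and not Knative's actual algorithm, which also averages over time windows and applies further limits; the `desiredPods` function and the target of 10 requests per pod are assumptions chosen for the example.

```go
package main

import (
	"fmt"
	"math"
)

// desiredPods returns how many pods are needed so that each pod handles at
// most targetPerPod concurrent requests. With no requests in flight the
// function can scale to zero.
func desiredPods(inFlight, targetPerPod float64) int {
	if inFlight <= 0 {
		return 0
	}
	return int(math.Ceil(inFlight / targetPerPod))
}

func main() {
	// Total concurrent requests as reported by the proxy containers.
	for _, inFlight := range []float64{0, 1, 10, 25} {
		fmt.Printf("in-flight=%v -> pods=%d\n", inFlight, desiredPods(inFlight, 10))
	}
}
```

With a target of 10, a load of 25 concurrent requests yields 3 pods, and a load of 0 yields none, which is the scale-to-zero behavior that makes the activator necessary on the next request.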

Overall, this flow illustrates how Knative Functions work, from the initial invocation to subsequent invocations, and the role of the ingress controller, activator, autoscaler, queue-proxy container, and user container.

Now to install Knative Functions, we execute the following commands:

brew tap knative-sandbox/kn-plugins

brew install func

Output:

ubuntuVM:~$ brew tap knative-sandbox/kn-plugins
Running `brew update --auto-update`...
==> Tapping knative-sandbox/kn-plugins
Cloning into '/home/linuxbrew/.linuxbrew/Homebrew/Library/Taps/knative-sandbox/homebrew-kn-plugins'...
remote: Enumerating objects: 595, done.
remote: Counting objects: 100% (213/213), done.
remote: Compressing objects: 100% (36/36), done.
remote: Total 595 (delta 189), reused 192 (delta 176), pack-reused 382
Receiving objects: 100% (595/595), 151.20 KiB | 1.12 MiB/s, done.
Resolving deltas: 100% (404/404), done.
Tapped 92 formulae (120 files, 383KB).

ubuntu@ubuntuVM:~$ brew install func
Running `brew update --auto-update`...
==> Fetching knative-sandbox/kn-plugins/func
==> Downloading https://github.com/knative/func/releases/download/knative-v1.11.0/func_linux_amd64
==> Downloading from https://objects.githubusercontent.com/github-production-release-asset-2e65be/242137258/8e91cca8-e0d6-409d-bf03-
############################################################################################################################# 100.0%
==> Installing func from knative-sandbox/kn-plugins
"Installing kn-func binary in /home/linuxbrew/.linuxbrew/Cellar/func/v1.11.0/bin"
"Installing func symlink in /home/linuxbrew/.linuxbrew/Cellar/func/v1.11.0/bin"
🍺 /home/linuxbrew/.linuxbrew/Cellar/func/v1.11.0: 4 files, 95.3MB, built in 2 seconds
==> Running `brew cleanup func`...
Disable this behaviour by setting HOMEBREW_NO_INSTALL_CLEANUP.
Hide these hints with HOMEBREW_NO_ENV_HINTS (see `man brew`).
ubuntu@ubuntuVM:~$

To create a Go function, run:

func create -l go knative-function-demo

To confirm that the function was created, check its directory:

ubuntuVM:~$ cd knative-function-demo
ubuntu@ubuntuVM:~/knative-function-demo$ ls
func.yaml go.mod handle.go handle_test.go README.md

Modify the handle.go file like this:

package function

import (
	"context"
	"fmt"
	"net/http"
)

// Handle an HTTP Request.
func Handle(ctx context.Context, res http.ResponseWriter, req *http.Request) {
	/*
	 * YOUR CODE HERE
	 *
	 * Try running `go test`. Add more test as you code in `handle_test.go`.
	 */

	fmt.Println("Received request")
	fmt.Println(prettyPrint(req))     // echo to local output
	fmt.Fprint(res, prettyPrint(req)) // echo to caller (Fprint: the message is not a format string)
}

// prettyPrint returns the fixed demo message; the request itself is ignored.
func prettyPrint(req *http.Request) string {
	return " You are a demo "
}

and run the function (locally) with the command:

func run --build --registry {registry}

If everything goes well, the output will be:

ubuntuVM:~/knative-function-demo$ func run --build --registry panosmavrikos
 🙌 Function built: docker.io/panosmavrikos/knative-function-demo:latest
Initializing HTTP function
listening on http port 8080
Running on host port 8080

Open http://localhost:8080 in a browser to see your function running, or call it with curl:

knative-function-demo$ curl http://localhost:8080
You are a demo
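The same check can be done without starting the function, in the spirit of the generated handle_test.go. The sketch below is an assumption about how one might do it, not the generated test itself: it copies the behavior of the modified handler into a standalone program (package main, so it runs with `go run`) and calls it with an in-memory request. The `callHandle` helper is a hypothetical name.

```go
package main

import (
	"context"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strings"
)

// Handle mirrors the modified handler from handle.go.
func Handle(ctx context.Context, res http.ResponseWriter, req *http.Request) {
	fmt.Fprint(res, " You are a demo ")
}

// callHandle invokes Handle with an in-memory request and returns the
// status code and the body with surrounding whitespace trimmed.
func callHandle() (int, string) {
	req := httptest.NewRequest(http.MethodGet, "/", nil)
	rec := httptest.NewRecorder()
	Handle(context.Background(), rec, req)
	return rec.Code, strings.TrimSpace(rec.Body.String())
}

func main() {
	code, body := callHandle()
	fmt.Println(code, body)
}
```

In the real project the same few lines would live in handle_test.go as a `go test` case, comparing the recorded body with the expected message.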

For a production setup, we have to build a container image and use it to run our function. To build the container image, run the following command with your registry:

func build --image docker.io/panosmavrikos/knative-function-demo:latest

To deploy our function in a production environment, execute the command:

func deploy --namespace default

Knative Service

To deploy a simple service in Knative, create a YAML file named hello.yaml like this:

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: hello
spec:
  template:
    spec:
      containers:
        - image: ghcr.io/knative/helloworld-go:latest
          ports:
            - containerPort: 8080
          env:
            - name: TARGET
              value: "World"

and apply it:

kubectl apply -f hello.yaml

Output:

service.serving.knative.dev/hello created

View a list of Knative Services by running the command:

kubectl get ksvc

Output:

ubuntuVM:~$ kubectl get ksvc
NAME    URL                                      LATESTCREATED   LATESTREADY   READY   REASON
hello   http://hello.default.svc.cluster.local   hello-00001     hello-00001   True

To access the Knative Service, open the URL above in a browser, or run the following command (the svc.cluster.local hostname resolves only from inside the cluster):

curl http://hello.default.svc.cluster.local

Output:

Hello World!
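For context, the code behind such a service can be very small. The sketch below is an assumption about what a container like this might do, not the source of the helloworld-go image: it reads the `TARGET` environment variable set in the manifest and greets it. The `greeting` and `hello` names and the fallback value are made up for illustration, and the handler is called in memory so the example terminates instead of listening on a port.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"os"
)

// greeting builds the response text, falling back to "World" when no
// target is configured.
func greeting(target string) string {
	if target == "" {
		target = "World"
	}
	return fmt.Sprintf("Hello %s!", target)
}

// hello answers every request with a greeting for the TARGET variable.
func hello(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintln(w, greeting(os.Getenv("TARGET")))
}

func main() {
	os.Setenv("TARGET", "World") // in the cluster, the manifest's env entry sets this

	rec := httptest.NewRecorder()
	hello(rec, httptest.NewRequest(http.MethodGet, "/", nil))
	fmt.Print(rec.Body.String())

	// Inside a container the handler would instead be served on the port
	// declared as containerPort, for example:
	//   http.HandleFunc("/", hello)
	//   http.ListenAndServe(":8080", nil)
}
```

Changing the `value` of `TARGET` in the manifest and applying it again would change the greeting, and, as described earlier, would also create a new Revision of the service.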

Congratulations!!!